Introduction

Artificial intelligence (AI) has become an integral part of everyday life and already has a significant impact on many areas, including industry, medicine, education, and business. Designed to simulate human cognition, behavior, and intelligence, AI tools help address problems in education, diagnosis and treatment, production, and marketing. At the same time, people do not immediately understand and accept revolutionary innovations: the rapid appearance of new technologies, such as the automobile, nuclear power plants, and cloning, has been met with distrust and concern. Because of fear and ethical considerations, some innovations were never widely adopted, despite their undoubted usefulness. Therefore, the introduction of innovations should be accompanied by a preliminary, or at least parallel, study of people's attitudes toward new ideas, their acceptance, and their comfortable use.

Given the growing influence of AI in education, many researchers (Choung et al., 2023; Kelly et al., 2023; Wang et al., 2021) have begun to study the factors that shape the attitudes of participants in the educational process toward various AI devices and tools: chatbots, AI agents, neural networks, AI-based assessment systems, etc. In this study, we do not focus on a specific AI tool but instead explore students' general acceptance of artificial intelligence. Understanding students' views and intentions is important because they determine the direction, scope, and effectiveness of AI use in higher education.

Researchers report that adaptive learning with AI improves students' test scores by 62%, increases overall academic performance by 30%, and reduces anxiety by 20%. Numerous AI applications that combine classical educational theories with the latest technological advances are being developed to support teachers and students (Chassignol et al., 2018; Doroudi, 2023; Perrotta & Selwyn, 2020). At the same time, the range of ethical issues related to the use of AI in education, including ensuring its transparency and accessibility, is expanding (Han et al., 2023).

As noted above, whether a person accepts the emergence and widespread adoption of AI depends largely on their attitude toward AI, that is, on their assessment of its positive and negative sides. Attitude, a powerful predictor of behavior, influences whether a student will trust AI technologies and use them to improve academic achievement and streamline many university procedures. Misunderstanding how AI works, and denying either its usefulness or the inevitability of its spread, can lead to distrust-driven resistance to AI adoption, hindering its effective use.

The adoption of AI tools depends on cognitive acceptance, a positive emotional response, and a behavioral intention to introduce AI into daily life, learning, or work. In addition, several specific factors related to the context of use, environmental conditions, and individual characteristics influence the adoption of AI technologies. Which factors promote, and which barriers prevent, the perception of AI as a necessary and important tool for improving learning effectiveness remains an open question. It is also necessary to divide the available factors into environmental and individual predictors of AI acceptance. This systematic review will also categorize the AI context discussed in each article. Identifying and classifying the factors of AI acceptance is crucial for synthesizing the available literature, for understanding how students of different cultures, ages, genders, fields, and educational levels perceive AI, and for determining the nature of this phenomenon.

Although there have been several systematic reviews of the use of AI in education, many of them have focused solely on specific applications or contexts. For example, Kelly et al. (2023) examined research designs and the conceptualization of AI in higher education. At the same time, other sociodemographic characteristics (age, gender, level of education, etc.) remain insufficiently explored. Mahmood et al. (2022) focused on AI ethics and ethical constraints.

Some authors limit themselves to studying AI acceptance within specific models (Acosta-Enriquez, Ramos Farroñán, et al., 2024), fields of education (Preiksaitis & Rose, 2023; Salas-Pilco & Yang, 2022), AI tools (Albayati, 2024; Ipek et al., 2023), or learning formats (Ouyang et al., 2022). Others focus on the discursive constructs of artificial intelligence in higher education, asking how the term "artificial intelligence" is used in the relevant literature (Bearman et al., 2023).

In addition, researchers' methodological approaches differ, but they mainly treat AI as an independent variable that contributes to the learning process (Buchanan et al., 2021; Ipek et al., 2023). We have not found any reviews in which AI acceptance is treated as a dependent variable influenced by other external and internal conditions and factors.

Bond et al. (2024), in a meta-systematic review, analyze in detail the types of existing reviews, areas of application, and gaps in reviews. The authors emphasize the need for greater consideration of ethics, methodology, and context in future research.

Taking these gaps into account, we set out to review the existing research and identify key factors influencing the adoption of artificial intelligence by university students, which, in turn, can help increase the accessibility of AI to various categories of students and the effectiveness of its implementation in higher education. This review presents the characteristics of research examining the perception of AI technologies and the key factors that, according to various studies, predict the adoption of AI systems across different educational sectors, enabling stakeholders to better understand what influences students' acceptance of AI.

A group of research questions was formulated to structure the review.

RQ1: What methodological approaches and types of research design are used in the available studies investigating students' acceptance of AI?

RQ2: What are the most common ways of using AI in higher education?

RQ3: What are the positive and negative factors contributing to AI acceptance?

RQ4: How does AI acceptance vary in different sociodemographic contexts?

Methodology

Search Strategy

This review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist (Page et al., 2021).

Two reviewers searched ScienceDirect, Web of Science, Scopus, PsycARTICLES, SOC INDEX, and Embase in September and October 2024. These databases were selected to reflect the interdisciplinary nature of AI adoption research, which spans various fields. Titles, abstracts, and keywords of journal articles were searched for the terms ("artificial intelligence" OR "AI" OR "neural networks" OR "machine learning" OR "deep learning" OR "cognitive computing") AND ("AI acceptance" OR "artificial intelligence acceptance" OR "AI accept" OR "user accept" OR "students' AI accept"). The search was supplemented by snowballing, that is, by adding relevant publications discovered by following references in the retrieved articles. The "Years", "Subject Areas", "Languages", and "Article Type" filters were applied. Books, book chapters, conference proceedings, editorial materials, and reports were excluded from the analysis.
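To make the intended operator precedence of this search string explicit, it can be assembled programmatically. The Python sketch below is illustrative only: the helper names `or_group` and `build_query` are hypothetical, and the database-specific field tags that restrict matching to titles, abstracts, and keywords are omitted because their syntax differs between databases.

```python
# Hypothetical helpers that assemble the Boolean search string described above.
# Each synonym list becomes a parenthesized OR-group, and the groups are joined
# with AND, so a record must match at least one term from every group.

AI_TERMS = [
    "artificial intelligence", "AI", "neural networks",
    "machine learning", "deep learning", "cognitive computing",
]
ACCEPTANCE_TERMS = [
    "AI acceptance", "artificial intelligence acceptance",
    "AI accept", "user accept", "students' AI accept",
]


def or_group(terms):
    """Quote each term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Join OR-groups with AND so that every group must be matched."""
    return " AND ".join(or_group(g) for g in groups)


query = build_query(AI_TERMS, ACCEPTANCE_TERMS)
print(query)
```

Explicit parentheses matter because databases do not all apply the same default precedence to mixed AND/OR expressions.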

Screening and Selection

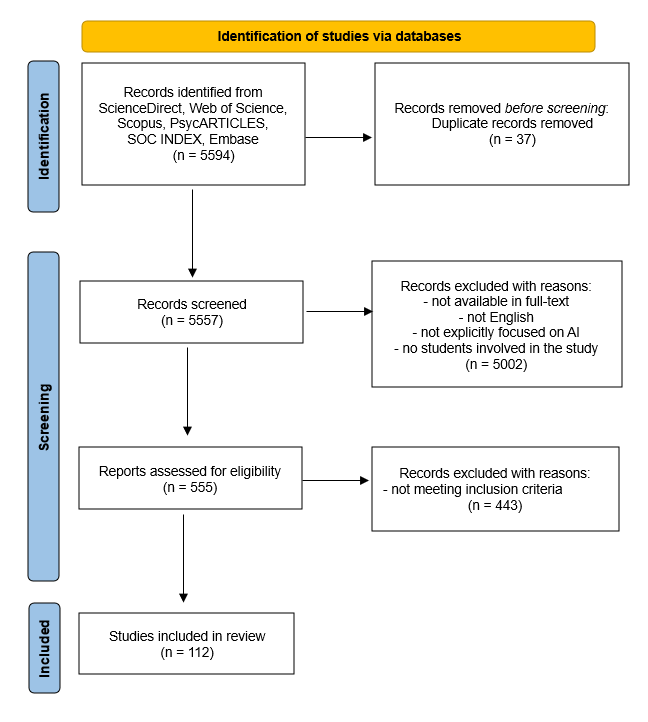

A total of 5594 articles were found at the identification stage. All titles were entered into a Microsoft Excel spreadsheet, and after 37 duplicates were deleted, 5557 records remained. At the screening stage, 5002 articles were excluded because they (i) were not available in full text, (ii) were not written in English, (iii) did not explicitly focus on AI, or (iv) were not related to university or college students. The two researchers independently read each article, cross-checked their work, and discussed the results jointly to resolve any disagreements and biases.
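Duplicate removal of this kind can also be scripted. The following Python sketch is a hypothetical alternative to the manual Excel procedure described above, assuming that two records are duplicates when their titles match after case, accents, and punctuation are normalized; the function names and record fields are illustrative, not part of the review's workflow.

```python
import re
import unicodedata


def normalize_title(title):
    """Lower-case a title, strip accents and punctuation, and collapse spaces."""
    text = unicodedata.normalize("NFKD", title)
    # Drop combining marks left over from decomposed accented letters.
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Replace every non-word, non-space character (punctuation) with a space.
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records):
    """Keep the first record per normalized title; return (kept, n_removed)."""
    seen = set()
    kept = []
    for record in records:
        key = normalize_title(record["title"])
        if key not in seen:
            seen.add(key)
            kept.append(record)
    return kept, len(records) - len(kept)


# Illustrative records (not the review's data): the first two differ only
# in capitalization, apostrophe style, and trailing punctuation.
records = [
    {"title": "Students' acceptance of AI", "source": "Scopus"},
    {"title": "Students\u2019 Acceptance of AI.", "source": "Web of Science"},
    {"title": "AI literacy in higher education", "source": "Scopus"},
]
kept, removed = deduplicate(records)
print(removed)  # 1
```

Exact-title matching is deliberately conservative; it will miss duplicates whose titles were changed between versions, which is why manual checking remains useful.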

The remaining records were exported to Abstrackr, a collaborative web-based tool for screening abstracts. This yielded 555 records, which formed the basic data set for checking compliance with the inclusion criteria (Table 1).

Table 1. Inclusion and Exclusion Criteria.

| Criterion | Inclusion criteria | Exclusion criteria |
| --- | --- | --- |
| Publication date | Articles published between 2020 and 2025 | Outside the defined range |
| Language | English | Not English |
| Participants | University and college students | Other participants |
| Research object | AI acceptance in higher education | Other research focus |
| Research question | AI is considered a dependent variable or outcome | AI is not a dependent variable or outcome |

Given the rapid development of AI, we limited the analysis to studies from the last five years, because many AI tools emerged and began to be actively used during this period; the features of user-AI interaction are therefore particularly pronounced and have been studied in this period (Kostikova et al., 2025). In line with the purpose of our study, we selected articles in which the participants were university and college students and AI acceptance was considered a dependent variable influenced by external and internal factors. Studies in which students' acceptance of AI was not measured as the outcome or dependent variable were omitted. All variables found to influence AI acceptance were analyzed and then organized into groups, and the results are presented at several levels.
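The Table 1 criteria can be expressed as a simple screening predicate. The sketch below assumes hypothetical coded fields (`year`, `language`, `participants`, `focus`, `acceptance_is_outcome`) for each article; in the review itself, eligibility was judged by the reviewers, not by code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    # Hypothetical coded fields for one full-text article.
    year: int
    language: str
    participants: str
    focus: str
    acceptance_is_outcome: bool


ELIGIBLE_PARTICIPANTS = {"university students", "college students"}
TARGET_FOCUS = "AI acceptance in higher education"


def exclusion_reasons(c: Candidate) -> List[str]:
    """Return the Table 1 criteria an article fails; an empty list means include."""
    reasons = []
    if not 2020 <= c.year <= 2025:
        reasons.append("publication date outside defined range")
    if c.language != "English":
        reasons.append("not English")
    if c.participants not in ELIGIBLE_PARTICIPANTS:
        reasons.append("other participants")
    if c.focus != TARGET_FOCUS:
        reasons.append("other research focus")
    if not c.acceptance_is_outcome:
        reasons.append("AI acceptance not a dependent variable or outcome")
    return reasons


eligible = Candidate(2023, "English", "university students", TARGET_FOCUS, True)
ineligible = Candidate(2019, "English", "school teachers", TARGET_FOCUS, False)
print(exclusion_reasons(eligible))    # []
print(exclusion_reasons(ineligible))
```

Returning every failed criterion, rather than stopping at the first, mirrors how a PRISMA flow diagram records reasons for exclusion.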

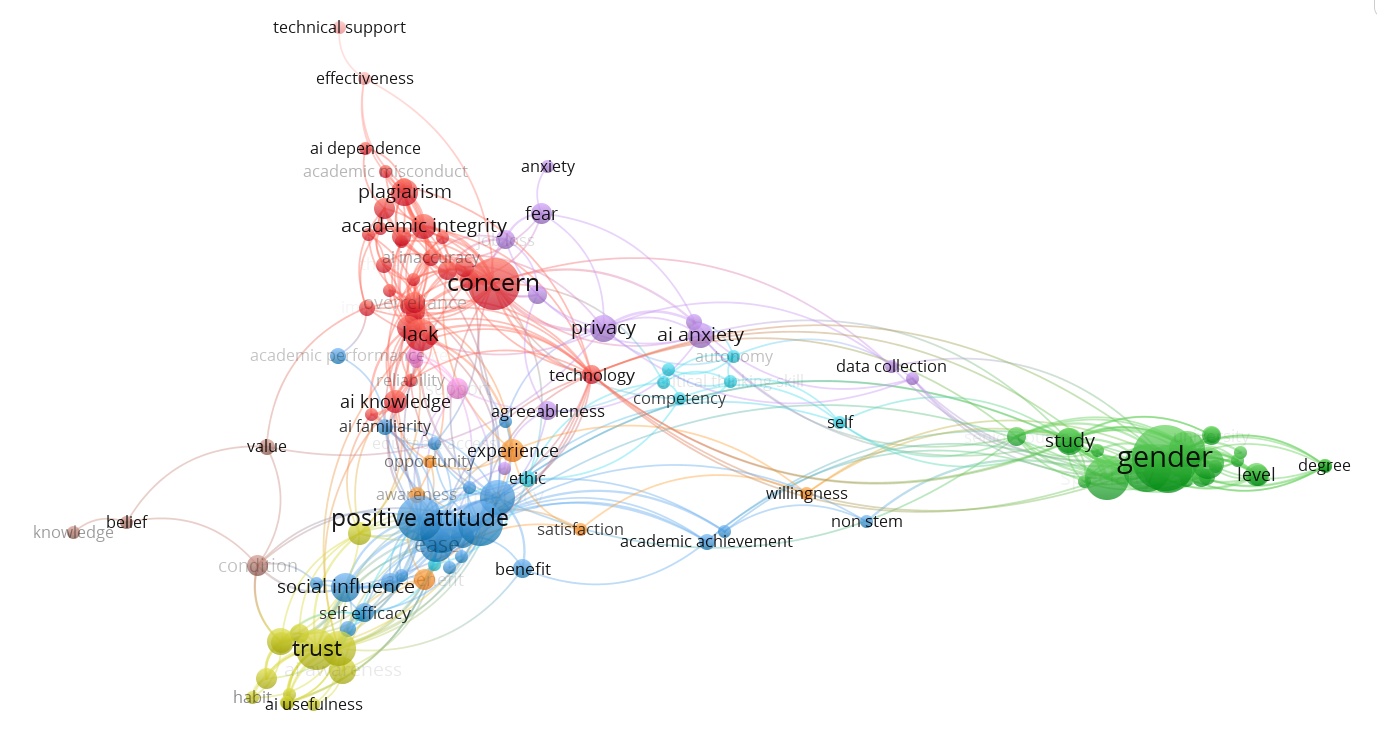

In the next step, after a full-text reading of all articles, 443 articles were excluded because they did not meet the established criteria. Thus, 112 articles were included in this systematic review. The bibliometric map shows the most frequently used keywords in the selected articles (Figure 1).

Figure 1. Bibliometric Map According to the Keywords of Selected Studies
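The record counts reported for the identification, screening, and eligibility stages can be checked for internal consistency. This short Python sketch uses only the numbers given in the text; the variable names are illustrative.

```python
# Record counts reported at each stage of the review.
identified = 5594
duplicates = 37
excluded_at_screening = 5002
excluded_at_full_text = 443

after_dedup = identified - duplicates                     # records screened
assessed_full_text = after_dedup - excluded_at_screening  # records checked against Table 1
included = assessed_full_text - excluded_at_full_text     # studies in the review

print(after_dedup, assessed_full_text, included)  # 5557 555 112
```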

Coding and Data Extraction

To collect complete information about all studies included in the review, we coded them in a Microsoft Excel spreadsheet. Based on the research questions, we developed a coding scheme for extracting data from each article. In addition to the dependent variable, the following information was coded: research goals, objectives, design, research methods, sample, year and country of study, and the sociodemographic and personal characteristics of the participants. One author organized the publications and assigned the codes; following the recommendations of Creswell and Creswell (2018), the second author rechecked this work to ensure coding consistency. The researchers then discussed the results jointly to clarify the criteria used and reach consensus. Content analysis was performed to identify theoretical and methodological categories. After extraction and coding were completed, a complete table of all studies was compiled. The authors independently grouped the factors by level and influence, in parallel with an analysis of the mediating variables. Collective discussion made it possible to eliminate discrepancies, and the process continued until the authors reached final agreement. Inter-rater reliability was assessed with the kappa statistic (McHugh, 2012), which showed substantial agreement (Cohen's κ = 0.74).
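For readers unfamiliar with the statistic, Cohen's kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder's label frequencies, p_e: kappa = (p_o - p_e) / (1 - p_e). The Python sketch below implements this formula; the level labels are invented for illustration and are not the review's coding data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters coding the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions per code.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Illustrative level codes assigned to ten factors by two coders.
coder_1 = ["tech", "tech", "ind", "ind", "soc", "org", "comp", "tech", "ind", "soc"]
coder_2 = ["tech", "tech", "ind", "soc", "soc", "org", "comp", "tech", "ind", "soc"]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2))  # 0.87
```

Here the coders agree on 9 of 10 items (p_o = 0.90), but chance alone would produce p_e = 0.23, so kappa is lower than raw agreement. On the same labels, the result should match scikit-learn's `sklearn.metrics.cohen_kappa_score`, which computes unweighted kappa by default.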

Results

Study Characteristics

The main steps of the search, selection, and inclusion process, the number of studies at each step, and the reasons for exclusion are shown in Figure 2. All papers were written in English and published between 2020 and 2025. Most studies were conducted in a single country, most often China (n = 18), the United States (n = 6), Saudi Arabia (n = 6), and Germany (n = 6); Peru, the United Arab Emirates, Turkey, and Hong Kong (n = 3 each) appear less frequently. Nine articles reported research conducted in two countries, five in three countries, and one in five countries. Four articles took a cross-country perspective (involving 47, 63, 76, and 109 countries). One article did not specify the country in which the study was conducted.

Figure 2. Flow Diagram of Literature Search

Among acceptance models, the Technology Acceptance Model (TAM), the Unified Theory of Acceptance and Use of Technology (UTAUT), and its extension UTAUT2 were the most frequently used theories for evaluating the adoption of AI tools (23, 12, and 6 studies, respectively). Three studies were conducted within the framework of Diffusion of Innovations Theory, Expectancy-Value Theory, and the Theory of Planned Behavior. Only one article mentions the Task-Technology Fit model. The Intrinsic Motivation Model, Trust Models in Human-AI Interaction, and the Device User Acceptance Model were not used in any of the studies. The authors of 58 articles did not specify any model.

Quantitative methods (multiple regression, structural equation modeling (SEM), partial least squares (PLS), SEM-PLS, path analysis, hierarchical linear modeling, and others) were used in 83 articles, and qualitative methods (text mining, interviews, content analysis, and others) in 13 articles. Mixed methods were used in 9 articles. Most of the data were obtained through surveys (90 articles). Seven articles are reviews.
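Assuming the four methodological categories are mutually exclusive, the counts above account for all 112 included articles. The Python sketch below checks this sum and derives each category's share; the dictionary keys are illustrative labels.

```python
# Article counts per methodological category, as reported above.
# Assumption: each included article falls into exactly one category.
method_counts = {"quantitative": 83, "qualitative": 13, "mixed methods": 9, "review": 7}

total = sum(method_counts.values())
print(total)  # 112

# Share of included articles per category, in percent.
shares = {name: 100 * n / total for name, n in method_counts.items()}
for name, pct in shares.items():
    print(f"{name}: {pct:.1f}%")
```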

AI in Higher Education

In 58 articles, participants were asked to indicate which areas of their academic life AI could help them with. Students report that they most often use AI to find and clarify information (32) and to work with text (41): writing, editing, and translation. AI also helps students plan their activities and improve their time management skills (10).

In 19 studies, students report plans to use AI in scientific research, most often to search for relevant literature (5), to facilitate and support research (4), and to help choose a research topic (3). Fifteen studies describe the use of AI to solve specific tasks: programming (6), urban services (3), mathematical problems (1), disaster prediction (1), etc. In 5 articles, students are confident that AI will help their future professional development and career growth. Medical students report intentions to use AI in the future for treating patients (22) and diagnosing diseases (15).

Factors of AI Acceptance

According to Kalinichenko and Velichkovsky (2022), organizational, sociopsychological, and individual factors can either promote or hinder the acceptance of AI. We expanded their model by adding technological and competence levels. Table 2 shows the constructs that the authors of the reviewed studies identify as factors influencing students' acceptance of AI.

Table 2. Identified Factors and Number of Studies

| Level | Positive factors (n) | Negative factors (n) |
| --- | --- | --- |
| Organizational | Facilitating conditions (5), institutional policy enabling the use of AI (1), timeliness and availability of educational and technical support (11), learning resources (2), alignment with educational goals (3) | Lack of resources (2), lack of perceived AI availability (2), lack of educational and technical support (2), institutional policy prohibiting the use of AI (1) |
| Technological | Enhancing the learning and research process (2), perceived comfort and ease of use (14), perceived usefulness (25), effort expectancy (5), positive impacts on productivity (1), perceived benefits, strengths, and advantages of AI (12), AI quality (3), functionality (1), flexibility (1), convenience and compatibility (1), trialability, accessibility, perceived interactivity, non-maleficence, autonomy, transparency, and intelligibility (1), feedback and assessment quality (1), opportunity to improve human lives (1) | Concerns about the quality and effectiveness of AI technologies: misinformation and malfunctions (1), perceived cost (1), perceived risk (1), lack of authenticity and flexibility (1), inaccuracy (2), technical difficulties (1), limited technological accessibility (1), concerns about the relevance of content provided (2), AI mistakes (2), lack of reliability (2). Cybersecurity threats: concerns about data privacy during data collection (1), storage, and sharing (1), cybersecurity attacks (1), manipulation of AI-based systems (1) |
| Social | Social influence (9), sociability quality (2), social interaction, social norms (2), supportive social environment (2), perception of AI as a cooperation partner for humans (1) | Concerns about ethics: lack of policies and guidelines, equity (3), need for strong regulation (3), replacement of humans by AI (4), job loss (4), threat to academic integrity and academic misconduct (6), less human interaction (1), negative attitudes toward AI ethics (2), rising inequality and AI discrimination (1), potential misuse (1), invasion of privacy (1), resistance to adopting technology (1), concerns about future AI use (1) |
| Individual | Positive attitude towards AI (22), trust in AI (17), values and beliefs (2), positive emotions and favorable feelings related to AI (3), hedonic and academic motivation (8), habit (2), perceived behavioral control and subjective norms (2), agreeableness (3), innovativeness (2), honesty–humility (2), openness (2), interest in AI (3) | Negative emotions related to AI: negative attitude towards AI (2), AI anxiety (2), susceptibility (1), fear about recent AI development (1), neuroticism (1), fear of total control by AI (1), laziness (2), AI resistance (1). Well-being concerns: distrust (1), AI dependence and overreliance (1), negative impact of AI on human emotion (1), reduced autonomy (1) |
| Competency | AI literacy (12), prior AI experience (20), prudence, technical savviness and readiness (8), AI ethics awareness (3), AI self-efficacy (11), relevant and efficient digital skills (2), performance expectancy (8), self-directed learning competency (2) | Reduced creativity (3), reduced social and critical thinking skills (2), negative impacts on efficiency (1), negative impact on learning competencies and outcomes (1), prior negative experiences (1), performance failure (1) |

When counting the factors, we analyzed the results of each study to determine the level to which each factor belongs. We also considered whether each factor correlated with AI adoption and how strongly, in order to identify studies in which this relationship was negligible. Following Cohen's (1988) recommendations, we classified each relationship as trivial, small, medium, or large. Figure 3 shows the number and distribution of the identified factors across levels.
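The Cohen (1988) classification can be sketched as a threshold function. The cut-offs below (|r| of .10, .30, and .50) are Cohen's conventional benchmarks for correlation coefficients; the text does not state the exact boundaries the review applied or how values falling exactly on a cut-off were handled, so treat both as assumptions.

```python
def cohen_effect_label(r: float) -> str:
    """Label a correlation coefficient using Cohen's (1988) benchmarks for r.

    Assumed cut-offs: |r| < .10 trivial, .10 to < .30 small,
    .30 to < .50 medium, and .50 or above large.
    """
    size = abs(r)  # direction does not affect magnitude
    if size < 0.10:
        return "trivial"
    if size < 0.30:
        return "small"
    if size < 0.50:
        return "medium"
    return "large"


# Illustrative coefficients only, not values from the reviewed studies.
for r in (0.05, -0.22, 0.35, 0.61):
    print(r, cohen_effect_label(r))
```

Using the absolute value lets the same function label negative factors (barriers), whose correlations with acceptance have the opposite sign.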

Figure 3. Factors of AI Acceptance

This review identified the largest number of factors with a moderate to large effect on AI acceptance at the technological level (34 factors across 88 studies). At the individual level, 25 factors were identified in 82 studies; at the sociopsychological level, 19 factors in 45 studies; at the competence level, 16 factors in 75 studies; and at the organizational level, 9 factors in 29 studies.

Positive Factors of AI Acceptance

The factors that most strongly encouraged students to use AI were the technological characteristics of AI: perceived usefulness (e.g., Kelly et al., 2023; Shahzad et al., 2024), perceived ease of use (e.g., Almogren et al., 2024; Lin & Yu, 2023), perceived benefits, strengths, and advantages of AI (Ivanov et al., 2024), effort expectancy (e.g., Camilleri, 2024; Kelly et al., 2023), etc.

Significant associations were also found between AI adoption and students' individual characteristics: positive attitude towards AI (e.g., Baek et al., 2024; Kwak et al., 2022; Sindermann et al., 2021), trust (Sadiq et al., 2025; Song, 2024), hedonic and academic motivation (e.g., Habibi et al., 2024; Shuhaiber et al., 2025), and others. Competencies in the field of AI are equally important: prior AI experience (Aladini et al., 2024; Alkhaaldi et al., 2023), AI literacy (Fan & Zhang, 2024; Hornberger et al., 2025), etc.

Social factors showed weaker associations: social influence (e.g., Albayati, 2024; Essien et al., 2024; Sallam et al., 2023), social norms (Wang & Zhang, 2023), and a supportive social environment (Acosta-Enriquez et al., 2025). The weakest correlations were found at the organizational level: timeliness and availability of educational and technical support (Jiang et al., 2024), facilitating conditions (Alzahrani, 2023; Waluyo & Kusumastuti, 2024), alignment with educational goals (Essien et al., 2024), etc.

Negative Factors of AI Acceptance

Negative factors of AI adoption follow a similar pattern. Technological factors showed the strongest effect: AI inaccuracy (e.g., Abouammoh et al., 2025; Ghotbi & Ho, 2021), concerns about the relevance of the content provided (Mogavi et al., 2024), and AI mistakes (Ghotbi & Ho, 2021; Nazaretsky et al., 2025). Significant associations were also found at the individual level, for example, negative attitude towards AI (e.g., Baek et al., 2024; Hornberger et al., 2025) and negative emotions (Lund et al., 2024; Rjoop et al., 2025), and at the social level, for example, concerns about ethics (Kim et al., 2025), job loss (Hatem et al., 2024; Sindermann et al., 2021), and threats to academic integrity. Factors at the competence level (Abouammoh et al., 2025; Mogavi et al., 2024), such as reduced social and critical thinking skills and a negative impact on learning outcomes, and at the organizational level (Liu et al., 2022; Truong et al., 2023), such as lack of resources and lack of educational and technical support, showed weaker associations.

The list of studies with identified factors is presented in Supplementary Table 1.

AI Acceptance in Different Sociodemographic Contexts

Several of the identified studies examined how AI acceptance varies among different groups of students. On most of the indicators studied, their conclusions are contradictory. Table 3 shows the number of studies describing sociodemographic variables that mediate the effects of AI acceptance factors.

Table 3. Variables Mediating the Influence of AI Acceptance Factors

| Variable | Mediates AI acceptance (n) | Does not mediate AI acceptance (n) |
| --- | --- | --- |
| Gender | 39 | 10 |
| Specialty | 20 | 1 |
| Age | 15 | 12 |
| Country | 11 | 1 |
| Level of education | 5 | 2 |
| Year of study | 6 | 1 |
| Income | 5 | 0 |
| Region (urban) | 3 | 0 |
| Type of institution | 4 | 2 |
| Religiosity | 2 | 0 |
| Low academic achievement | 1 | 0 |

The largest number of studies concern the specifics of AI acceptance across gender groups, and their findings differ. Some studies (26) report that gender has an enhancing mediating effect on AI acceptance factors; for example, male students are more positive about AI (Adžić et al., 2024; Alam et al., 2023; Baek et al., 2024; Bisdas et al., 2021). Other studies (13) report a weakening mediating effect of gender: female students show less trust in AI technology (Sindermann et al., 2021), lower perceived ease of use (Kim et al., 2025), and lower perceived safety (Li et al., 2022). Still other authors (10) find no association between gender and attitudes towards AI (Acosta-Enriquez, Arbulú Pérez Vargas, et al., 2024; Kaya et al., 2024; Montag et al., 2023).

Findings on AI literacy also differ. Some authors (Bisdas et al., 2021; Cherrez-Ojeda et al., 2024; Elhassan et al., 2025; Truong et al., 2023; Yeh et al., 2021) report that male students are more prepared and competent with respect to AI than female students, while others (Elchaghaby & Wahby, 2025; Fitzek & Choi, 2024) conclude that gender does not predict AI knowledge or AI experience.

Findings are similarly mixed regarding the perception of risks associated with the widespread adoption of AI technologies (Kaya et al., 2024; Lund et al., 2024). Only two studies report that anxiety about potential risks does not depend on students' gender. Other studies indicate that female students perceive AI as less safe (Li et al., 2022; Nazaretsky et al., 2025; Yigitcanlar et al., 2024). Female students are also more likely to support stricter regulation of AI (Bartneck et al., 2024; Yeh et al., 2021). Male students, in turn, perceive a higher risk of losing their jobs as AI displaces employees in the workplace (Baek et al., 2024; Ghotbi & Ho, 2021).

The frequency of using AI technologies (Dolenc & Brumen, 2024; Rjoop et al., 2025; Sallam et al., 2023), trust in AI (Kozak & Fel, 2024; Sindermann et al., 2021; Yigitcanlar et al., 2024), performance expectancy, and the perceived usefulness of AI (Alkhaaldi et al., 2023; Lai et al., 2023; Ozbey & Yasa, 2025; Sallam et al., 2023; Sindermann et al., 2021) are considerably higher among male students. By contrast, Solórzano Solórzano et al. (2024) report no significant relationship between gender and either hedonic motivation or habit, and Sabraz Nawaz et al. (2024) likewise find no relationship between gender and hedonic motivation. In some studies, intention to use AI (Lund et al., 2024), users' personal characteristics as a factor of AI acceptance (Stein et al., 2024), confidence in AI accuracy (Fitzek & Choi, 2024), and the ethical aspects of AI (Mwase et al., 2023) also appear unrelated to gender.

Regarding AI acceptance among students of different specialties, the authors show greater agreement. Only one study reports that acceptance is independent of students' specialty (Mwase et al., 2023). Other studies conclude that various aspects of acceptance depend on students' specialties (15 and 5 studies, reporting mediating effects of different kinds). Students in STEM fields show a higher frequency of AI use (Baek et al., 2024; Kim et al., 2025), higher AI literacy (Acosta-Enriquez, Ramos Farroñán, et al., 2024; Yusuf et al., 2024), and a more positive attitude towards AI (Baek et al., 2024; Kim et al., 2025). High AI literacy is also noted among students of engineering (Kharroubi et al., 2024; Stöhr et al., 2024), technical sciences (Adžić et al., 2024), and applied fields (Qu et al., 2024), who have a stronger intention to use AI. Business students have a positive attitude towards AI (Adžić et al., 2024; Yeh et al., 2021) and little concern about the possibility of AI taking total control over human lives (Yeh et al., 2021). The frequency of AI use and confidence in its usefulness are higher among computer science students (Dolenc & Brumen, 2024; Nazaretsky et al., 2025). Students of the humanities, medicine, and social sciences are more concerned about ethical issues in AI (Ravšelj et al., 2025; Stöhr et al., 2024). Thus, the level of acceptance of AI technologies and the choice of ways to use them vary among students of different specialties and fields of study (Fitzek & Choi, 2024; Ravšelj et al., 2025; Xu et al., 2024).

Findings on students' age also differ: some studies report that age strengthens the effect of acceptance factors (8), others that it weakens it (7), and still others find no mediating effect (12). Perceptions of AI's impact on the course of life and on the ability to make decisions increase with respondents' age (Yeh et al., 2021). AI literacy (Kharroubi et al., 2024), demand for stricter regulation (Bartneck et al., 2024), and a positive attitude towards AI (Baek et al., 2024; Cherrez-Ojeda et al., 2024) are also more common among older students. Concerns about the risks posed by AI (Lund et al., 2024; Yigitcanlar et al., 2024), specifically unemployment and total AI surveillance, are more pronounced in the older age group (Yeh et al., 2021). At the same time, older students have less confidence in AI (Yigitcanlar et al., 2024).

In contrast, other authors report that factors such as concern for ethics (Mwase et al., 2023), social influence (Tao et al., 2024), trust in AI (Alkhaaldi et al., 2023), intention to use AI tools (Mustofa et al., 2025; Sallam et al., 2023; Solórzano Solórzano et al., 2024; Xu et al., 2024), and attitudes towards AI (Acosta-Enriquez, Arbulú Pérez Vargas, et al., 2024; Chen et al., 2024; Fitzek & Choi, 2024) do not depend on age.

Year of study was found to have a significant impact on AI acceptance, although this variable is examined in only 6 studies. For example, AI literacy (Kwak et al., 2022; Xu et al., 2024), frequency of use (Stöhr et al., 2024), and trust (Kozak & Fel, 2024) are higher among senior students. Senior students also show higher self-efficacy, which students regard as helping them use AI tools more effectively, and a more positive attitude towards AI technologies. At the same time, their concerns about the ethical aspects of AI are lower (Kwak et al., 2022; Ravšelj et al., 2025). First-year students are more sensitive to social influence regarding AI adoption than senior students (Elchaghaby & Wahby, 2025). The most debated issues concern perceived reliability, trust in AI technologies, confidence in their security, and views on the future development of AI technologies.

As for the level of education (5 studies), it has a reinforcing mediating effect on a positive attitude towards AI (Bisdas et al., 2021) and on AI literacy (Elhassan et al., 2025), but a weakening effect on a positive attitude towards AI and the perceived opportunity to improve human lives (Yeh et al., 2021). Two studies (Mwase et al., 2023; Sallam et al., 2023) conclude that the level of education does not significantly contribute to the effect of AI acceptance factors.

Students at private universities also differ from their peers in their views on AI and their levels of AI acceptance (4 studies report a mediating effect, 2 report no effect). They show higher hedonic motivation and AI productivity. At the same time, students at public universities are reported to have higher acceptance of AI technologies (Cherrez-Ojeda et al., 2024; Espartinez, 2024). In addition, the university's policy allowing or prohibiting the use of AI is an important mediating factor (Baek et al., 2024; Li et al., 2022), although some studies do not observe this relationship (Elchaghaby & Wahby, 2025; Sallam et al., 2023).

AI acceptance factors were found to be sensitive to the local context (12 studies). Students from different countries show different levels of perceived usefulness of AI tools (Bisdas et al., 2021), AI literacy (Hornberger et al., 2025; Yusuf et al., 2024), self-efficacy (Hornberger et al., 2025), trust in AI (Kozak & Fel, 2024; Sindermann et al., 2021), and fear of technology adoption (Lund et al., 2024; Sindermann et al., 2021). They also differ in their attitudes toward AI technologies (Bisdas et al., 2021; Hornberger et al., 2025) and in their views on possible ethical problems of AI (Bisdas et al., 2021), discrimination in access to AI (Ghotbi & Ho, 2021), and increased control over the use of AI (Bartneck et al., 2024; Ghotbi & Ho, 2021).

In addition, students living in urban areas show less anxiety about and fewer difficulties with the use of AI, and greater preparedness to use it (Lund et al., 2024; Ravšelj et al., 2025), than students from rural areas.

Only 2 studies report that students' religiosity also plays an important role in AI acceptance: religious students feel more positive about AI (Kozak & Fel, 2024) but call for stricter control over its use (Bartneck et al., 2024).

Five studies examine the financial well-being of students using AI tools. AI literacy and attitudes towards AI are more positive among higher-income students (Alam et al., 2023; Baek et al., 2024; Truong et al., 2023), whereas low-income students use AI more frequently (Ravšelj et al., 2025) and are more likely to call for increased control over its use (Bartneck et al., 2024). Finally, only 1 study treats academic performance as a variable mediating the adoption of AI (Lund et al., 2024).

Conclusion

To summarize, this review shows that technological-level factors have the most significant impact on AI acceptance. Depending on the specific conditions, technological factors can either strongly stimulate or restrain students' attitudes towards AI and their intention to use it. These factors are also frequently included in previous studies (Al Farsi, 2023; Marlina et al., 2021; Salas-Pilco & Yang, 2022). The frequency with which authors mention trust, positive attitudes, and the perceived usefulness of AI technologies highlights the significance of these factors. This finding is consistent with many other studies, for example, on the perceived usefulness of technology (Arpaci et al., 2023; Keskin et al., 2016; Salifu et al., 2024) and on trust in and positive attitudes towards AI (Bilquise et al., 2024; Buabbas et al., 2023).

Students' personal characteristics (individual-level factors) also make a significant contribution to AI acceptance, with a similar degree of influence. This is consistent with the conclusions of other authors, who emphasize the importance of variables such as expected success, self-efficacy, values, motivation, habit, and autonomy for understanding users' acceptance of AI (Baudisch et al., 2022; Nikolopoulou et al., 2021). The next most frequently mentioned concerns are students' fears that the introduction of AI will lead to a loss of skills and negatively affect their personal development and psychological well-being. Specifically, students expect educational motivation, creativity, and mental activity to decrease, and procrastination, loss of identity, and dependence on AI to arise. Similar findings are reported by other researchers (Chakraborty Samant et al., 2024; Chang et al., 2023; Craig et al., 2019).

Organizational-level factors have the least effect. Some authors studying other samples have likewise identified organizational influences on AI acceptance: facilitating conditions (Ebadi & Raygan, 2023; Wang & Zhang, 2023; Zhao et al., 2023), university support (Er et al., 2021; Mamun et al., 2022), and relevant learning goals and requirements (Nguyen et al., 2024; Walter, 2024).

The factors determining the adoption of AI vary depending on the student's gender, specialty, and country of residence.

Limitations

This review has several limitations related to both the review methodology and the included studies. At the same time, our analysis of the research methodologies points to opportunities for future research. Mixed research methods could significantly improve the reliability of results, and a significant number of empirical studies do not rely on any theoretical framework.

Articles were included in the review only if they were available in English, which excluded a potentially large body of articles written in other languages. The main search keyword was “acceptance of artificial intelligence”, without specifying levels, technologies, or tools, which may have reduced the number of studies retrieved. Most studies used only an online survey, which limited the number of participants and precluded the additional information that combining research methods could have provided.

Some studies did not report the exact number of participants or the countries where the study was conducted, which may reduce the accuracy with which cross-cultural differences can be assessed. More in-depth cross-cultural studies could reveal numerous differences involving a wide range of socio-cultural, political, demographic, infrastructural, and other factors. The predominance of studies from developed Western countries is a critical consideration when explaining AI adoption in other countries. For example, some countries may allocate more funds for training in the use of AI, and cultures may differ in their values or biases towards AI.

Due to the limited number of studies, we were unable to draw clear conclusions about the impact of education level, year of study, academic performance, student income, and institutional policy. The studies also provided no information on whether participants were international or local students. These areas may form the basis of future reviews.

The results may also be distorted to some extent because not all fields of education are represented in the research. For example, students in medical and technical fields participate in research more often than students in pedagogy or the social sciences. This highlights the importance of expanding research to different educational contexts to gain a more global understanding of the factors influencing AI adoption.

Theoretical Implications

This study contributes to the literature in several ways. First, it complements existing reviews of AI in higher education by offering an updated overview of the factors influencing AI acceptance in this field, whereas previous reviews considered either the general field of research or, conversely, narrow areas of AI application. By combining bibliometric and content analysis, we gain a comprehensive understanding of the currently available literature and its research designs, models, and theories.

Second, it synthesizes existing models of AI adoption in university settings and offers a better understanding of the complexity of perceptions and behaviors among various sociodemographic user groups.

Third, it clarifies the role of individual factors influencing AI acceptance, which can help make AI adoption more personalized by taking into account users' needs, attitudes, and personality characteristics.

Fourth, the study expands our understanding of the AI research landscape and identifies several areas that warrant researchers' attention. One is the social and organizational factors of AI adoption, which are poorly represented in the sampled articles and call for more in-depth study. Another is the introduction of AI into non-STEM disciplines and various cultural contexts, which remains poorly understood and opens new opportunities for future research.

Practical Implications

This review allows us to make several practical recommendations. First, the results are of practical importance for higher education institutions seeking to effectively integrate artificial intelligence technologies into their educational and administrative practices.

The study highlights the need to improve the digital literacy of both students and university teachers, which must be considered when designing educational programs at the higher and postgraduate levels. Universities should develop clear guidelines for the ethical and responsible use of AI in education, coordinate AI implementation, and create incentives for its use across disciplines.

Additional educational work is needed on the risks associated with AI (personal data leakage, cyber-attacks, etc.), their correct perception, and their prevention. Reliable data protection and privacy measures should be implemented, and the university community should be informed about the ethical and social consequences of AI.

Second, policymakers need to strengthen efforts to address economic and ethical barriers to AI technologies in different countries and to adapt AI implementation strategies to different cultural and educational contexts. A thorough understanding of AI acceptance factors at all levels and in all contexts will help support vulnerable groups of students, ensure equal access to AI tools for all, and promote equality in education.

Third, by identifying the key factors influencing the adoption of artificial intelligence, this study provides valuable information for developing more effective and cost-effective implementation strategies. Understanding students' conceptions of and attitudes towards AI can help in developing and implementing AI applications and tools that meet students' needs and preferences.

Knowing students’ views about AI can help stakeholders decide where to invest their resources in developing AI technologies. Developers and users need to be aware of these issues and ensure the responsible development and implementation of AI tools. Advanced models can not only predict attitudes towards AI but also explain the reasons for its acceptance and rejection, enabling researchers and practitioners to take diagnostic and corrective measures.

Conflict of Interest

The authors declare no potential conflicts of interest.

Funding

This research has been funded by the Committee of Science of the Ministry of Science and Higher Education of the Republic of Kazakhstan (Grant No. BR21882302, “Kazakhstan's society in the context of digital transformation: prospects and risks”).

Authorship Contribution Statement

Mukhamedkarimova: Design, analysis, drafting of the manuscript. Umurkulova: Editing, supervision.

References

Abouammoh, N., Alhasan, K., Aljamaan, F., Raina, R., Malki, K. H., Altamimi, I., Muaygil, R., Wahabi, H., Jamal, A., Alhaboob, A., Assiri, R. A., Al-Tawfiq, J. A., Al-Eyadhy, A., Soliman, M., & Temsah, M.-H. (2025). Perceptions and earliest experiences of medical students and faculty with ChatGPT in medical education: Qualitative study. JMIR Medical Education, 11(1), Article e63400. https://doi.org/10.2196/63400

Acosta-Enriquez, B. G., Arbulú Pérez Vargas, C. G., Huamaní Jordan, O., Arbulú Ballesteros, M. A., & Paredes Morales, A. E. (2024). Exploring attitudes toward ChatGPT among college students: An empirical analysis of cognitive, affective, and behavioral components using path analysis. Computers and Education: Artificial Intelligence, 7, Article 100320. https://doi.org/10.1016/j.caeai.2024.100320

Acosta-Enriquez, B. G., Guzmán Valle, M. Á., Arbulú Ballesteros, M., Arbulú Castillo, J. C., Arbulu Perez Vargas, C. G., Saavedra Torres, I., Silva León, P. M., & Saavedra Tirado, K. (2025). What is the influence of psychosocial factors on artificial intelligence appropriation in college students? BMC Psychology, 13, Article 7. https://doi.org/10.1186/s40359-024-02328-x

Acosta-Enriquez, B. G., Ramos Farroñán, E. V., Villena Zapata, L. I., Mogollon Garcia, F. S., Rabanal-León, H. C., Morales Angaspilco, J. E., & Saldaña Bocanegra, J. C. (2024). Acceptance of artificial intelligence in university contexts: A conceptual analysis based on UTAUT2 theory. Heliyon, 10(19), Article e38315. https://doi.org/10.1016/j.heliyon.2024.e38315

Adžić, S., Savić Tot, T., Vukovic, V., Radanov, P., & Avakumović, J. (2024). Understanding student attitudes toward GenAI tools: A comparative study of Serbia and Austria. International Journal of Cognitive Research in Science, Engineering and Education, 12(3), 583–611. https://doi.org/10.23947/2334-8496-2024-12-3-583-611

Aladini, A., Mahmud, R., & Ali, A. A. H. (2024). The importance of needs satisfaction, teacher support, and L2 learning experience in Intelligent Computer-Assisted Language Assessment (ICALA): A probe into the state of willingness to communicate as well as academic motivation in EFL settings. Language Testing in Asia, 14, Article 58. https://doi.org/10.1186/s40468-024-00334-9

Alam, M. J., Hassan, R., & Ogawa, K. (2023). Digitalization of higher education to achieve sustainability: Investigating students’ attitudes toward digitalization in Bangladesh. International Journal of Educational Research Open, 5, Article 100273. https://doi.org/10.1016/j.ijedro.2023.100273

Albayati, H. (2024). Investigating undergraduate students’ perceptions and awareness of using ChatGPT as a regular assistance tool: A user acceptance perspective study. Computers and Education: Artificial Intelligence, 6, Article 100203. https://doi.org/10.1016/j.caeai.2024.100203

Al Farsi, G. (2023). The efficiency of UTAUT2 model in predicting students’ acceptance of using virtual reality technology. International Journal of Interactive Mobile Technologies, 17(12), 17–27. https://doi.org/10.3991/ijim.v17i12.36951

Alkhaaldi, S. M. I., Kassab, C. H., Dimassi, Z., Alsoud, L. O., Fahim, M. A., Hageh, C. A., & Ibrahim, H. (2023). Medical student experiences and perceptions of ChatGPT and artificial intelligence: Cross-sectional study. JMIR Medical Education, 9(1), Article e51302. https://doi.org/10.2196/51302

Almogren, A. S., Al-Rahmi, W. M., & Dahri, N. A. (2024). Exploring factors influencing the acceptance of ChatGPT in higher education: A smart education perspective. Heliyon, 10, Article e31887. https://doi.org/10.1016/j.heliyon.2024.e31887

Alzahrani, L. (2023). Analyzing students’ attitudes and behavior toward artificial intelligence technologies in higher education. International Journal of Recent Technology and Engineering, 11(6), 65–73. https://doi.org/10.35940/ijrte.F7475.0311623

Arpaci, I., Masrek, M. N., Al-Sharaf, M. A., & Al-Emran, M. (2023). Evaluating the actual use of cloud computing in higher education through information management factors: A cross-cultural comparison. Education and Information Technologies, 28, 12089–12109. https://doi.org/10.1007/s10639-023-11594-y

Baek, C., Tate, T., & Warschauer, M. (2024). "ChatGPT seems too good to be true": College students’ use and perceptions of generative AI. Computers and Education: Artificial Intelligence, 7, Article 100294. https://doi.org/10.1016/j.caeai.2024.100294

Bartneck, C., Yogeeswaran, K., & Sibley, C. G. (2024). Personality and demographic correlates of support for regulating artificial intelligence. AI and Ethics, 4, 419–426. https://doi.org/10.1007/s43681-023-00279-4

Baudisch, J., Richter, B., & Jungeblut, T. (2022). A framework for learning event sequences and explaining detected anomalies in a smart home environment. KI – Künstliche Intelligenz, 36, 259–266. https://doi.org/10.1007/s13218-022-00775-5

Bearman, M., Ryan, J., & Ajjawi, R. (2023). Discourses of artificial intelligence in higher education: A critical literature review. Higher Education, 86, 369–385. https://doi.org/10.1007/s10734-022-00937-2

Bilquise, G., Ibrahim, S., & Salhieh, S. E. M. (2024). Investigating student acceptance of an academic advising chatbot in higher education institutions. Education and Information Technologies, 29, 6357–6382. https://doi.org/10.1007/s10639-023-12076-x

Bisdas, S., Topriceanu, C.-C., Zakrzewska, Z., Irimia, A.-V., Shakallis, L., Subhash, J., & Ebrahim, E. H. (2021). Artificial intelligence in medicine: A multinational multi-center survey on the medical and dental students’ perception. Frontiers in Public Health, 9, Article 795284. https://doi.org/10.3389/fpubh.2021.795284

Bond, M., Khosravi, H., De Laat, M., Bergdahl, N., Negrea, V., Oxley, E., Pham, P., Wang Chong, S., & Siemens, G. (2024). A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. International Journal of Educational Technology in Higher Education, 21, 1–4. https://doi.org/10.1186/s41239-023-00436-z

Buabbas, A. J., Miskin, B., Alnaqi, A. A., Ayed, A. K., Shehab, A. A., & Syed-Abdul, S. (2023). Investigating students' perceptions towards artificial intelligence in medical education. Healthcare, 11(9), Article 1298. https://doi.org/10.3390/healthcare11091298

Buchanan, C., Howitt, M. L., Wilson, R., Booth, R. G., Risling, T., & Bamford, M. (2021). Predicted influences of artificial intelligence on nursing education: Scoping review. JMIR Nursing, 4(1), Article e23933. https://doi.org/10.2196/23933

Camilleri, M. A. (2024). Factors affecting performance expectancy and intentions to use ChatGPT: Using SmartPLS to advance an information technology acceptance framework. Technological Forecasting and Social Change, 201, Article 123247. https://doi.org/10.1016/j.techfore.2024.123247

Chakraborty Samant, A., Tyagi, I., Vybhavi, J., Jha, H., & Patel, J. (2024). ChatGPT dependency disorder in healthcare practice: An editorial. Cureus, 16(8), Article e66155. https://doi.org/10.7759/cureus.66155

Chang, D. H., Lin, M. P.-C., Hajian, S., & Wang, Q. Q. (2023). Educational design principles of using AI chatbot that supports self-regulated learning in education: Goal setting, feedback, and personalization. Sustainability, 15(17), Article 12921. https://doi.org/10.3390/su151712921

Chassignol, M., Khoroshavin, A., Klimova, A., & Bilyatdinova, A. (2018). Artificial intelligence trends in education: A narrative overview. Procedia Computer Science, 136, 16–24. https://doi.org/10.1016/j.procs.2018.08.233

Chen, D., Liu, W., & Liu, X. (2024). What drives college students to use AI for L2 learning? Modeling the roles of self-efficacy, anxiety, and attitude based on an extended technology acceptance model. Acta Psychologica, 249, Article 104442. https://doi.org/10.1016/j.actpsy.2024.104442

Cherrez-Ojeda, I., Gallardo-Bastidas, J. C., Robles-Velasco, K., Osorio, M. F., Velez Leon, E. M., Leon Velastegui, M., Pauletto, P., Aguilar-Díaz, F. C., Squassi, A., González Eras, S. P., Cordero Carrasco, E., Chavez Gonzalez, K. L., Calderon, J. C., Bousquet, J., Bedbrook, A., & Faytong-Haro, M. (2024). Understanding health care students’ perceptions, beliefs, and attitudes toward AI-powered language models: Cross-sectional study. JMIR Medical Education, 10(1), Article e51757. https://doi.org/10.2196/51757

Choung, H., David, P., & Ross, A. (2023). Trust in AI and its role in the acceptance of AI technologies. International Journal of Human–Computer Interaction, 39(9), 1727–1739. https://doi.org/10.1080/10447318.2022.2050543

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge.

Craig, K., Thatcher, J., & Grover, V. (2019). The IT identity threat: A conceptual definition and operational measure. Journal of Management Information Systems, 36(1), 259–288. https://doi.org/10.1080/07421222.2018.1550561

Creswell, J. W., & Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches (5th ed.). SAGE Publications, Inc.

Dolenc, K., & Brumen, M. (2024). Exploring social and computer science students’ perceptions of AI integration in (foreign) language instruction. Computers and Education: Artificial Intelligence, 7, Article 100285. https://doi.org/10.1016/j.caeai.2024.100285

Doroudi, S. (2023). The intertwined histories of artificial intelligence and education. International Journal of Artificial Intelligence in Education, 33, 885–928. https://doi.org/10.1007/s40593-022-00313-2

Ebadi, S., & Raygan, A. (2023). Investigating the facilitating conditions, perceived ease of use and usefulness of mobile-assisted language learning. Smart Learning Environments, 10, Article 30. https://doi.org/10.1186/s40561-023-00250-0

Elchaghaby, M., & Wahby, R. (2025). Knowledge, attitudes, and perceptions of a group of Egyptian dental students toward artificial intelligence: A cross-sectional study. BMC Oral Health, 25, Article 11. https://doi.org/10.1186/s12903-024-05282-7

Elhassan, S. E., Sajid, M. R., Syed, A. M., Fathima, S. A., Khan, B. S., & Tamim, H. (2025). Assessing familiarity, usage patterns, and attitudes of medical students toward ChatGPT and other chat-based AI apps in medical education: Cross-sectional questionnaire study. JMIR Medical Education, 11, Article e63065. https://doi.org/10.2196/63065

Er, H. M., Nadarajah, V. D., Chen, Y. S., Misra, S., Perera, J., Ravindranath, S., & Hla, Y. Y. (2021). Twelve tips for institutional approach to outcome-based education in health professions programmes. Medical Teacher, 43(sup1), S12–S17. https://doi.org/10.1080/0142159X.2019.1659942

Espartinez, A. S. (2024). Exploring student and teacher perceptions of ChatGPT use in higher education: A Q-methodology study. Computers and Education: Artificial Intelligence, 7, Article 100264. https://doi.org/10.1016/j.caeai.2024.100264

Essien, A., Salami, A., Ajala, O., Adebisi, B., Shodiya, A., & Essien, G. (2024). Exploring socio-cultural influences on generative AI engagement in Nigerian higher education: An activity theory analysis. Smart Learning Environments, 11, Article 63. https://doi.org/10.1186/s40561-024-00352-3

Fan, J., & Zhang, Q. (2024). From literacy to learning: The sequential mediation of attitudes and enjoyment in AI-assisted EFL education. Heliyon, 10(17), Article e37158. https://doi.org/10.1016/j.heliyon.2024.e37158

Fitzek, S., & Choi, K.-E. A. (2024). Shaping future practices: German-speaking medical and dental students’ perceptions of artificial intelligence in healthcare. BMC Medical Education, 24, Article 844. https://doi.org/10.1186/s12909-024-05826-z

Ghotbi, N., & Ho, M. T. (2021). Moral awareness of college students regarding artificial intelligence. Asian Bioethics Review, 13, 421–433. https://doi.org/10.1007/s41649-021-00182-2

Habibi, A., Mukminin, A., Octavia, A., Wahyuni, S., Danibao, B. K., & Wibowo, Y. G. (2024). ChatGPT acceptance and use through UTAUT and TPB: A big survey in five Indonesian universities. Social Sciences & Humanities Open, 10, Article 101136. https://doi.org/10.1016/j.ssaho.2024.101136

Han, B., Nawaz, S., Buchanan, G., & McKay, D. (2023). Ethical and pedagogical impacts of AI in education. In N. Wang, G. Rebolledo-Mendez, N. Matsuda, O. C. Santos, & V. Dimitrova (Eds.), Lecture notes in computer science (pp. 1–7). Springer. https://doi.org/10.1007/978-3-031-36272-9_54

Hatem, N. A. H., Ibrahim, M. I. M., & Yousuf, S. A. (2024). Assessing Yemeni university students’ public perceptions toward the use of artificial intelligence in healthcare. Scientific Reports, 14, Article 28299. https://doi.org/10.1038/s41598-024-80203-w

Hornberger, M., Bewersdorff, A., Schiff, D., & Nerdel, C. (2025). A multinational assessment of AI literacy among university students in Germany, the UK, and the US. Computers in Human Behavior: Artificial Humans, 7, Article 100132. https://doi.org/10.1016/j.chbah.2025.100132

Ipek, Z. H., Gözüm, A. İ. C., Papadakis, S., & Kalogiannakis, M. (2023). Educational applications of the ChatGPT AI system: A systematic review research. Educational Process: International Journal, 12(3), 26–55. https://doi.org/10.22521/edupij.2023.123.2

Ivanov, S., Soliman, M., Tuomi, A., Alkathiri, N. A., & Al-Alawi, A. N. (2024). Drivers of generative AI adoption in higher education through the lens of the Theory of Planned Behaviour. Technology in Society, 77, Article 102521. https://doi.org/10.1016/j.techsoc.2024.102521

Jiang, Q., Zhang, Y., Wei, W., & Gu, C. (2024). Evaluating technological and instructional factors influencing the acceptance of AIGC-assisted design courses. Computers and Education: Artificial Intelligence, 7, Article 100287. https://doi.org/10.1016/j.caeai.2024.100287

Kalinichenko, N. S., & Velichkovsky, B. B. (2022). Феномен принятия информационных технологий: современное состояние и направления дальнейших исследований [The technology acceptance phenomenon: Current state and future research]. Organizational Psychology / Организационная психология, 12(1), 128–152. https://doi.org/10.17323/2312-5942-2022-12-1-128-152

Kaya, F., Aydin, F., Schepman, A., Rodway, P., Yetişensoy, O., & Demir Kaya, M. (2024). The roles of personality traits, AI anxiety, and demographic factors in attitudes toward artificial intelligence. International Journal of Human–Computer Interaction, 40(2), 497–514. https://doi.org/10.1080/10447318.2022.2151730

Kelly, S., Kaye, S.-A., & Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 77, Article 101925. https://doi.org/10.1016/j.tele.2022.101925

Keskin, H. K., Bastug, M., & Atmaca, T. (2016). Factors directing students to academic digital reading. Education and Science, 41(188), 117–129. https://doi.org/10.15390/EB.2016.6655

Kharroubi, S. A., Tannir, I., Abu El Hassan, R., & Ballout, R. (2024). Knowledge, attitude, and practices toward artificial intelligence among university students in Lebanon. Education Sciences, 14(8), Article 863. https://doi.org/10.3390/educsci14080863

Kim, J., Klopfer, M., Grohs, J. R., Eldardiry, H., Weichert, J., Cox, L. A., II, & Pike, D. (2025). Examining faculty and student perceptions of generative AI in university courses. Innovative Higher Education. Advance online publication. https://doi.org/10.1007/s10755-024-09774-w

Kostikova, L. P., Yesenina, N. E., & Olkov, A. S. (2025). Искусственный интеллект в образовательном процессе современного университета [Artificial intelligence in the educational process of a modern university]. Концепт, 2, 93–109. http://e-koncept.ru/2025/251017.htm

Kozak, J., & Fel, S. (2024). How sociodemographic factors relate to trust in artificial intelligence among students in Poland and the United Kingdom. Scientific Reports, 14, Article 28776. https://doi.org/10.1038/s41598-024-80305-5

Kwak, Y., Ahn, J.-W., & Seo, Y. H. (2022). Influence of AI ethics awareness, attitude, anxiety, and self-efficacy on nursing students’ behavioral intentions. BMC Nursing, 21, Article 267. https://doi.org/10.1186/s12912-022-01048-0

Lai, C. Y., Cheung, K. Y., & Chan, C. S. (2023). Exploring the role of intrinsic motivation in ChatGPT adoption to support active learning: An extension of the technology acceptance model. Computers and Education: Artificial Intelligence, 5, Article 100178. https://doi.org/10.1016/j.caeai.2023.100178

Li, W., Sun, K., Schaub, F., & Brooks, C. (2022). Disparities in students’ propensity to consent to learning analytics. International Journal of Artificial Intelligence in Education, 32, 564–608. https://doi.org/10.1007/s40593-021-00254-2

Lin, Y., & Yu, Z. (2023). Extending Technology Acceptance Model to higher-education students’ use of digital academic reading tools on computers. International Journal of Educational Technology in Higher Education, 20, Article 34. https://doi.org/10.1186/s41239-023-00403-8

Liu, D. S., Sawyer, J., Luna, A., Aoun, J., Wang, J., Boachie, L., Halabi, S., & Joe, B. (2022). Perceptions of US medical students on artificial intelligence in medicine: Mixed methods survey study. JMIR Medical Education, 8(4), Article e38325. https://doi.org/10.2196/38325

Lund, B. D., Mannuru, N. R., & Agbaji, D. (2024). AI anxiety and fear: A look at perspectives of information science students and professionals towards artificial intelligence. Journal of Information Science. Advance online publication. https://doi.org/10.1177/01655515241282001

Mahmood, A., Sarwar, Q., & Gordon, C. (2022). A systematic review on artificial intelligence in education (AIE) with a focus on ethics and ethical constraints. Pakistan Journal of Multidisciplinary Research, 3(1), 79–92. https://pjmr.org/pjmr/article/view/245

Mamun, A. A., Hossain, A., Salehin, S., Khan, S. H., & Hasan, M. (2022). Engineering students’ readiness for online learning amidst the COVID-19 pandemic. Educational Technology & Society, 25(3), 30–45. https://doi.org/10.21203/RS.3.RS-374991/V1

Marlina, E., Tjahjadi, B., & Ningsih, S. (2021). Factors affecting student performance in e-learning: A case study of higher educational institutions in Indonesia. Journal of Asian Finance, Economics and Business, 8(4), 993–1001. https://doi.org/10.13106/jafeb.2021.vol8.no4.0993

McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282. https://doi.org/10.11613/BM.2012.031

Mogavi, R. H., Deng, C., Kim, J. J., Zhou, P., Kwon, Y. D., Metwally, A. H. S., Tlili, A., Bassanelli, S., Bucchiarone, A., Gujar, S., Nacke, L. E., & Hui, P. (2024). ChatGPT in education: A blessing or a curse? A qualitative study exploring early adopters’ utilization and perceptions.Computers in Human Behavior: Artificial Humans, 2(1), Article 100027.https://doi.org/10.1016/j.chbah.2023.100027

Montag, C., Kraus, J., Baumann, M., & Rozgonjuk, D. (2023). The propensity to trust in (automated) technology mediates the links between technology self-efficacy and fear and acceptance of artificial intelligence. Computers in Human Behavior Reports, 11, Article 100315.https://doi.org/10.1016/j.chbr.2023.100315

Mustofa, R. H., Kuncoro, T. G., Atmono, D., Hermawan, H. D., & Sukirman. (2025). Extending the technology acceptance model: The role of subjective norms, ethics, and trust in AI tool adoption among students.Computers and Education: Artificial Intelligence, 8, Article 100379.https://doi.org/10.1016/j.caeai.2025.100379

Mwase, N. S., Patrick, S. M., Wolvaardt, J., Van Wyk, M., Junger, W., & Wichmann, J. (2023). Public health practice and artificial intelligence: Views of future professionals.Journal of Public Health, 33, 1481-1489.https://doi.org/10.1007/s10389-023-02127-5

Nazaretsky, T., Mejia-Domenzain, P., Swamy, V., Frej, J., & Käser, T. (2025). The critical role of trust in adopting AI-powered educational technology for learning: An instrument for measuring student perceptions.Computers and Education: Artificial Intelligence, 8, Article 100368.https://doi.org/10.1016/j.caeai.2025.100368

Nguyen, A., Kremantzis, M., Essien, A., Petrounias, I., & Hosseini, S. (2024). Enhancing student engagement through artificial intelligence (AI): Understanding the basics, opportunities, and challenges.Journal of University Teaching and Learning Practice, 21(6).https://doi.org/10.53761/caraaq92

Nikolopoulou, K., Gialamas, V., & Lavidas, K. (2021). Habit, hedonic motivation, performance expectancy and technological pedagogical knowledge affect teachers’ intention to use mobile internet. Computers and Education Open, 2, Article 100041.https://doi.org/10.1016/J.CAEO.2021.100041

Ouyang, F., Zheng, L., & Jiao, P. (2022). Artificial intelligence in online higher education: A systematic review of empirical research from 2011 to 2020.Education and Information Technologies, 27, 7893–7925.https://doi.org/10.1007/s10639-022-10925-9

Ozbey, F., & Yasa, Y. (2025). The relationships of personality traits on perceptions and attitudes of dentistry students towards AI.BMC Medical Education, 25, Article 26.https://doi.org/10.1186/s12909-024-06630-5

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hofmann, T. C., Mulrow, C. D., & Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews.BMJ, 372, Articlen71.https://doi.org/10.1136/bmj.n71

Perrotta, C., & Selwyn, N. (2020). Deep learning goes to school: Toward a relational understanding of AI in education. Learning, Media and Technology, 45(3), 251–269.https://doi.org/10.1080/17439884.2020.1686017

Preiksaitis, C., & Rose, C. (2023). Opportunities, challenges, and future directions of generative artificial intelligence in medical education: Scoping review.JMIR Medical Education, 9, Article e48785.https://doi.org/10.2196/48785

Qu, Y., Tan, M. X. Y., & Wang, J. (2024). Disciplinary differences in undergraduate students’ engagement with generative artificial intelligence.Smart Learning Environments, 11,Article51.https://doi.org/10.1186/s40561-024-00341-6

Ravšelj, D., Keržič, D., Tomaževič, N., Umek, L., Brezovar, N., A Iahad, N., Abdulla, A. A, Akopyan, A., Aldana Segura, M.W., Al Humaid, J., Allam, M. F., Alló, M., Andoh, R. P. K., Andronic, O., Arthur, Y. D., Aydın, F., Badran, A., Balbontín-Alvarado, R., Ben Saad, H, Aristovnik, A. (2025). Higher education students’ perceptions of ChatGPT: A global study of early reactions.PLOS One, 20(2), Articlee0315011.https://doi.org/10.1371/journal.pone.0315011

Rjoop, A., Al-Qudah, M., Alkhasawneh, R., Bataineh, N., Abdaljaleel, M., Rjoub, M. A., Alkhateeb, M., Abdelraheem, M., Al-Omari, S., Bani-Mari, O., Alkabalan, A., Altulaih, S., Rjoub, I., & Alshimi, R. (2025). Awareness and attitude toward artificial intelligence among medical students and pathology trainees: Survey study. JMIR Medical Education, 11, Article e62669. https://doi.org/10.2196/62669

Sabraz Nawaz, S., Fathima Sanjeetha, M. B., Al Murshidi, G., Mohamed Riyath, M. I., Mat Yamin, F. B., & Mohamed, R. (2024). Acceptance of ChatGPT by undergraduates in Sri Lanka: A hybrid approach of SEM-ANN. Interactive Technology and Smart Education, 21(4), 546–570. https://doi.org/10.1108/ITSE-11-2023-0227

Sadiq, S., Kaiwei, J., Aman, I., & Mansab, M. (2025). Examine the factors influencing the behavioral intention to use social commerce adoption and the role of AI in SC adoption. European Research on Management and Business Economics, 31(1), Article 100268. https://doi.org/10.1016/j.iedeen.2024.100268

Salas-Pilco, S. Z., & Yang, Y. (2022). Artificial intelligence applications in Latin American higher education: A systematic review. International Journal of Educational Technology in Higher Education, 19, Article 21. https://doi.org/10.1186/s41239-022-00326-w

Salifu, I., Arthur, F., Arkorful, V., Abam Nortey, S., & Solomon Osei-Yaw, R. (2024). Economics students’ behavioural intention and usage of ChatGPT in higher education: A hybrid structural equation modelling-artificial neural network approach. Cogent Social Sciences, 10(1), Article 2300177. https://doi.org/10.1080/23311886.2023.2300177

Sallam, M., Salim, N. A., Barakat, M., Al-Mahzoum, K., Al-Tammemi, A. B., Malaeb, D., Hallit, R., & Hallit, S. (2023). Assessing health students’ attitudes and usage of ChatGPT in Jordan: Validation study. JMIR Medical Education, 9, Article e48254. https://doi.org/10.2196/48254

Shahzad, M. F., Xu, S., & Javed, I. (2024). ChatGPT awareness, acceptance, and adoption in higher education: The role of trust as a cornerstone. International Journal of Educational Technology in Higher Education, 21, Article 46. https://doi.org/10.1186/s41239-024-00478-x

Shuhaiber, A., Kuhail, M. A., & Salman, S. (2025). ChatGPT in higher education: A student’s perspective. Computers in Human Behavior Reports, 17, Article 100565. https://doi.org/10.1016/j.chbr.2024.100565

Sindermann, C., Sha, P., Zhou, M., Wernicke, J., Schmitt, H. S., Li, M., Sariyska, R., Stavrou, M., Becker, B., & Montag, C. (2021). Assessing the attitude towards artificial intelligence: Introduction of a short measure in German, Chinese, and English language. KI - Künstliche Intelligenz, 35, 109–118. https://doi.org/10.1007/s13218-020-00689-0

Solórzano Solórzano, S. S., Pizarro Romero, J. M., Díaz Cueva, J. G., Arias Montero, J. E., Zamora Campoverde, M. A., Lozzelli Valarezo, M. M., Montes Ninaquispe, J. C., Acosta Enriquez, B. G., & Arbulú Ballesteros, M. A. (2024). Acceptance of artificial intelligence and its effect on entrepreneurial intention in foreign trade students: A mirror analysis. Journal of Innovation and Entrepreneurship, 13, Article 59. https://doi.org/10.1186/s13731-024-00412-5

Song, D. (2024, December 5). How learners’ trust changes in generative AI over a semester of undergraduate courses. Research Square. https://doi.org/10.21203/rs.3.rs-4433522/v1

Stein, J.-P., Messingschlager, T., Gnambs, T., Hutmacher, F., & Appel, M. (2024). Attitudes towards AI: Measurement and associations with personality. Scientific Reports, 14, Article 2909. https://doi.org/10.1038/s41598-024-53335-2

Stöhr, C., Ou, A. W., & Malmström, H. (2024). Perceptions and usage of AI chatbots among students in higher education across genders, academic levels, and fields of study. Computers and Education: Artificial Intelligence, 7, Article 100259. https://doi.org/10.1016/j.caeai.2024.100259

Tao, W., Yang, J., & Qu, X. (2024). Utilization of, perceptions on, and intention to use AI chatbots among medical students in China: National cross-sectional study. JMIR Medical Education, 10, Article e57132. https://doi.org/10.2196/57132

Truong, N. M., Vo, T. Q., Tran, H. T. B., Nguyen, H. T., & Pham, V. N. H. (2023). Healthcare students’ knowledge, attitudes, and perspectives toward artificial intelligence in the Southern Vietnam. Heliyon, 9(12), Article e22653. https://doi.org/10.1016/j.heliyon.2023.e22653

Walter, Y. (2024). Embracing the future of Artificial Intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21, Article 15. https://doi.org/10.1186/s41239-024-00448-3

Waluyo, B., & Kusumastuti, S. (2024). Generative AI in student English learning in Thai higher education: More engagement, better outcomes? Social Sciences & Humanities Open, 10, Article 101146. https://doi.org/10.1016/j.ssaho.2024.101146

Wang, Y., Liu, C., & Tu, Y. F. (2021). Factors affecting the adoption of AI-based applications in higher education: An analysis of teachers’ perspectives using structural equation modeling. Educational Technology & Society, 24(3), 116–129. https://www.jstor.org/stable/27032860

Wang, Y., & Zhang, W. (2023). Factors influencing the adoption of generative AI for art designing among Chinese Generation Z: A structural equation modeling approach. IEEE Access, 11, 143272–143284. https://doi.org/10.1109/ACCESS.2023.3342055

Xu, X., Su, Y., Zhang, Y., Wu, Y., & Xu, X. (2024). Understanding learners’ perceptions of ChatGPT: A thematic analysis of peer interviews among undergraduates and postgraduates in China. Heliyon, 10(4), Article e26239. https://doi.org/10.1016/j.heliyon.2024.e26239

Yeh, S.-C., Wu, A.-W., Yu, H.-C., Wu, H. C., Kuo, Y.-P., & Chen, P.-X. (2021). Public perception of artificial intelligence and its connections to the Sustainable Development Goals. Sustainability, 13(16), Article 9165. https://doi.org/10.3390/su13169165

Yigitcanlar, T., Degirmenci, K., & Inkinen, T. (2024). Drivers behind the public perception of artificial intelligence: Insights from major Australian cities. AI & Society, 39, 833–853. https://doi.org/10.1007/s00146-022-01566-0

Yusuf, A., Pervin, N., & Román-González, M. (2024). Generative AI and the future of higher education: A threat to academic integrity or reformation? Evidence from multicultural perspectives. International Journal of Educational Technology in Higher Education, 21, Article 21. https://doi.org/10.1186/s41239-024-00453-6

Zhao, W., Hu, F., Wang, J., Shu, T., & Xu, Y. (2023). A systematic literature review on social commerce: Assessing the past and guiding the future. Electronic Commerce Research and Applications, 57, Article 101219. https://doi.org/10.1016/j.elerap.2022.101219