Introduction

The rapid advancement of artificial intelligence (AI) has profoundly transformed various sectors, including higher education, by reshaping teaching and learning paradigms. AI-driven technologies enable personalized and adaptive learning experiences, allowing students to tailor their learning strategies and progress at an individualized pace (Chadha, 2024; Tulcanaza-Prieto et al., 2023; Vishwanathaiah et al., 2023). The integration of AI into educational environments, however, necessitates the redesign of pedagogical frameworks to ensure that technology supports—not supplants—the learning process (Baskara, 2023; Imran et al., 2024). Through adaptive learning systems and intelligent tutoring applications, AI enhances educational accessibility and promotes self-directed learning (Hongli & Leong, 2024; Wei, 2023). Moreover, generative AI tools contribute to the creation of dynamic, interactive learning environments that foster exploration, problem-solving, and collaborative knowledge construction (Moulin, 2024).

Despite the significant advantages brought by artificial intelligence (AI) in higher education, increasing concern has arisen over students’ growing dependence on AI tools, which may impede the development of higher-order cognitive skills such as critical thinking and reasoning (Imran et al., 2024; Walter, 2024). The convenience and immediacy of AI-generated outputs often discourage students from engaging in deeper analytical exploration, thereby limiting opportunities for independent intellectual inquiry (Luo, 2024; Walter, 2024). This dependency presents a crucial pedagogical dilemma for universities striving to integrate technology effectively while preserving students’ capacity for autonomous reasoning and reflective judgment. Addressing this challenge requires a deliberate balance between leveraging AI for learning efficiency and ensuring that it remains a catalyst for—not a substitute for—cognitive development.

In this context, strengthening students’ critical thinking skills becomes increasingly vital to maintain intellectual autonomy in the era of pervasive AI use. Critical thinking is a foundational competency in higher education, encompassing the ability to analyze, interpret, synthesize, evaluate, infer, and self-regulate to make sound judgments and solve complex problems (Facione, 2023; Paul & Elder, 2019). Although AI enhances academic productivity by efficiently processing and generating vast amounts of information, it does not inherently cultivate the reflective, evaluative, and metacognitive processes essential to critical reasoning (Fan et al., 2025; Gerlich, 2025). Since AI systems produce responses based on data-driven patterns rather than authentic human reasoning, they may inadvertently constrain students’ ability to engage with uncertainty, evaluate multiple perspectives, and construct independent analytical judgments (Zhai et al., 2024). These cognitive limitations are further compounded by ethical concerns, such as academic integrity, algorithmic bias, and the authenticity of AI-generated content, which complicate the pedagogical role of AI in higher education (Amirjalili et al., 2024; Barua, 2024). Without purposeful instructional design and guided reflection, AI risks functioning as a cognitive shortcut that replaces genuine intellectual effort rather than fostering critical inquiry and thoughtful engagement.

Several studies have investigated the role of AI in higher education and its implications for the development of critical thinking skills. For instance, Kizilcec et al. (2024) highlighted the influence of generative AI tools on academic practices, particularly the risk of academic dishonesty, which may undermine efforts to cultivate critical thinking. Similarly, Sarwanti et al. (2024) examined students’ perceptions and experiences with ChatGPT, revealing that over-reliance on AI tools can create substantial barriers to the development of independent analytical skills. Dergaa et al. (2023) emphasized both the potential benefits and ethical risks of natural language processing (NLP) technologies such as ChatGPT in academic writing, underscoring the necessity of preserving human critical reasoning in educational contexts. In addition, Murtiningsih et al. (2024) pointed out practical challenges associated with AI use in higher education, noting a decline in students’ reflective and analytical capabilities when dependence on AI becomes excessive.

Although these studies provide valuable insights into the impact, risks, and perceptions related to AI use in higher education, a research gap persists. Most prior research has primarily focused on students’ experiences, the risks of AI dependency, and ethical considerations, leaving limited understanding of how critical thinking itself is conceptualized, structured, and strategically fostered in the AI era. To address this gap, the present study adopts a combined bibliometric and systematic literature review (SLR) approach to comprehensively map the research landscape of critical thinking in higher education amid AI integration. Specifically, this study aims to identify the core constructs of critical thinking emerging from existing literature and to propose strategic approaches for its development in the AI era. By doing so, it contributes to a more nuanced understanding of how AI can be effectively leveraged to support the cultivation of critical thinking within higher education frameworks. Guided by this objective, three primary research questions structure this investigation:

1. What is the current mapping of publications on critical thinking in higher education in the AI era?

2. Which constructs of critical thinking in the AI era have been identified by researchers?

3. What strategies are recommended for fostering critical thinking in higher education amid the growing influence of AI?

Methodology

Research Design

This study combines a quantitative bibliometric analysis with a qualitative systematic literature review. The bibliometric analysis maps the current publication landscape, while the systematic literature review explores strategies for developing critical thinking in higher education in the AI era. The Scopus database, recognized as one of the most reliable and widely used sources of academic information, serves as the primary source for this research. However, relying on a single database introduces coverage bias, because relevant documents indexed only in other sources are not captured. This limitation is acknowledged in the conclusion section, and the findings should be interpreted as specific to the Scopus database.

Data Collection

The bibliometric investigation followed five main stages: research design, data collection, data analysis, data visualization, and data interpretation (Salido et al., 2024; Zupic & Čater, 2014). The research design established critical thinking in the era of AI in higher education as the research area and Scopus as the primary study database. Scopus was chosen for its reliability in providing comprehensive bibliographic metadata and its coverage of reputable international journals (Nasrum et al., 2025). Data collection was conducted on February 18, 2025, using the search query: “critical thinking” AND (“Artificial Intelligence” OR AI) AND (“higher education” OR university OR college OR institute OR campus). The search query was developed from related keywords and validated by two additional researchers. The search yielded 322 documents published between 2022 and 2024, limited to articles, conference papers, and reviews. The starting year of 2022 was chosen to correspond with the introduction of AI chatbots in higher education (McGrath et al., 2025; Neumann et al., 2023). Restricting document types aligns with the study's objective of mapping publications related to research activities. All relevant bibliographic metadata were exported in Comma-Separated Values (CSV) format to ensure compatibility with Biblioshiny R and VOSviewer.
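The inclusion filters described above (publication years 2022 to 2024; articles, conference papers, and reviews) can be sketched as a check on an exported CSV file. This is a minimal illustration rather than the authors' procedure: the column names `Year` and `Document Type` are assumed to match the export, and the sample rows are hypothetical.

```python
import csv
import io

# Hypothetical sample rows standing in for an exported CSV file.
SAMPLE_CSV = """Title,Year,Document Type
Paper A,2023,Article
Paper B,2021,Article
Paper C,2024,Editorial
Paper D,2022,Conference Paper
"""

# Criteria stated in the text.
ALLOWED_TYPES = {"Article", "Conference Paper", "Review"}
FIRST_YEAR, LAST_YEAR = 2022, 2024


def filter_records(csv_text):
    """Keep rows whose year and document type match the stated criteria."""
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = []
    for row in reader:
        in_window = FIRST_YEAR <= int(row["Year"]) <= LAST_YEAR
        if in_window and row["Document Type"] in ALLOWED_TYPES:
            kept.append(row)
    return kept


selected = filter_records(SAMPLE_CSV)
print([row["Title"] for row in selected])
```

In this toy input, only the 2023 article and the 2022 conference paper pass both filters.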

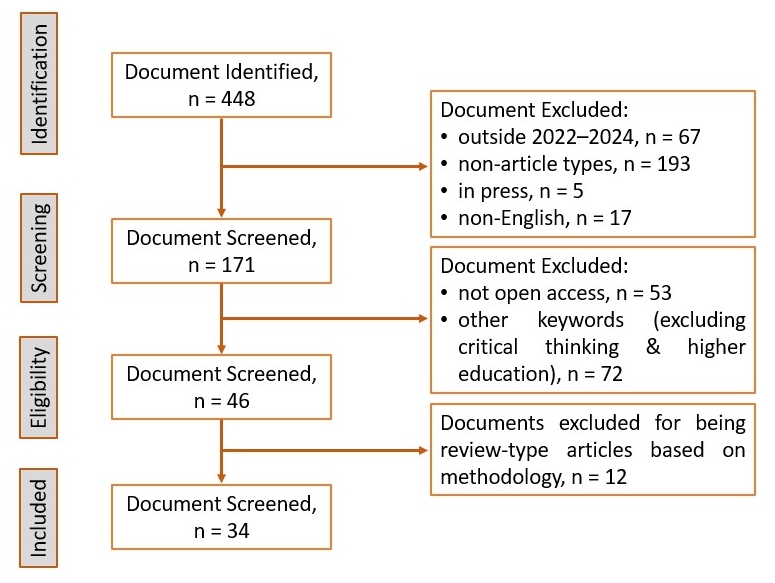

Simultaneously, a systematic literature review was conducted following the PRISMA guidelines, which include four stages: identification, screening, eligibility, and inclusion (Moher et al., 2015), as illustrated in Figure 1. Initially, 448 publications on critical thinking in the AI era in higher education were identified in the Scopus database. During the initial screening, 282 documents were excluded because they were published before 2022, were not original research, had not reached final publication, or were not in English. These criteria ensured that the review focused on original, fully published research conducted in the period reflecting the emergence of AI chatbots such as ChatGPT and published in a widely accessible language. Further filtering excluded 125 documents due to closed access or the absence of keywords relevant to the study topic. Finally, 12 documents were removed after full-text review, as they were opinion pieces, reviews, or editorials, despite being categorized as original articles in Scopus. This multi-layered filtering process resulted in 34 documents included as the primary dataset for analysis.

Figure 1. PRISMA Flow Chart

Data Analysis

Bibliometric analysis was performed using Biblioshiny R and VOSviewer. Prior to analysis, metadata were cleaned using OpenRefine to remove inconsistencies and duplicate terms. Additional cleaning was performed using a thesaurus during mapping in VOSviewer. Data visualization was applied throughout the analysis process, and outputs relevant to the study objectives were selected for presentation. The visualizations were interpreted by the authors and validated by three additional researchers with expertise in the respective fields.
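The thesaurus-based cleaning step can be illustrated with a small sketch. It assumes a thesaurus that maps variant keyword labels to one preferred label; the entries and the sample keywords below are hypothetical and are not taken from the study's actual thesaurus file.

```python
# Hypothetical thesaurus: variant label -> preferred label.
THESAURUS = {
    "ai": "artificial intelligence",
    "chat gpt": "chatgpt",
    "critical thinking skills": "critical thinking",
}


def normalize(keyword):
    """Lower-case, collapse whitespace, and map to the preferred label."""
    cleaned = " ".join(keyword.lower().split())
    return THESAURUS.get(cleaned, cleaned)


def clean_keywords(keywords):
    """Normalize keywords and drop duplicates while keeping first-seen order."""
    seen = []
    for kw in keywords:
        label = normalize(kw)
        if label not in seen:
            seen.append(label)
    return seen


raw = ["AI", "Artificial  Intelligence", "Chat GPT", "ChatGPT",
       "Critical Thinking Skills"]
print(clean_keywords(raw))
```

Merging variants before mapping matters because otherwise the same concept would appear as several separate nodes, each with a lower occurrence count.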

For the systematic literature review, the 34 selected documents were examined and summarized using a deductive thematic analysis approach (Nowell et al., 2017). This process involved identifying and categorizing key themes to address the research questions effectively. The analysis focused on two main areas: (1) the constructs of critical thinking in the AI era and (2) strategies for developing critical thinking suggested by previous studies. The thematic findings were validated by three co-authors, who also participated in the bibliometric validation, and the results presented reflect a consensus reached through discussion among all validators.

Findings

The findings of this study cover two main aspects: (1) an overview of the landscape and mapping of publications on critical thinking in the era of AI in higher education, and (2) a synthesis of research on the integration of AI in the development of critical thinking in higher education. The landscape analysis and mapping of publications include the identification of key journal sources, globally impactful documents, impactful authors, current key research themes, and emerging themes with potential for future exploration. The synthesis of research findings presents an overview of the conceptualization and constructs of critical thinking in the era of AI, together with the strategies for developing critical thinking suggested by previous researchers. These findings provide a broad analytical perspective, offering critical insights related to the research themes.

Landscape and Mapping of Publications on Critical Thinking in Higher Education in the Era of AI

This study identified the ten most relevant journal sources that significantly contribute to the development of AI-integrated critical thinking in higher education. As illustrated in Table 1, these sources were identified based on the number of papers they published between 2022 and 2024.

Table 1. Top Ten Most Relevant Sources

| Source | Documents | Citations |
| --- | --- | --- |
| Cogent Education | 8 | 146 |
| Education Sciences | 8 | 894 |
| Journal of Applied Learning and Teaching | 8 | 67 |
| ASEE Annual Conference and Exposition, Conference Proceedings | 7 | 10 |
| Communications in Computer and Information Science | 7 | 73 |
| Frontiers in Education | 7 | 101 |
| Lecture Notes in Networks and Systems | 7 | 18 |
| Journal of Information Technology Education: Research | 6 | 86 |
| Education and Information Technologies | 5 | 443 |
| IEEE Global Engineering Education Conference, EDUCON | 5 | 17 |

Table 1 lists the ten most relevant sources contributing to the field of artificial intelligence (AI) and critical thinking development in higher education. The analysis shows that Education Sciences, Cogent Education, and the Journal of Applied Learning and Teaching are the most productive outlets, each publishing eight papers on the topic. Other important contributors include the ASEE Annual Conference and Exposition Proceedings, Communications in Computer and Information Science (CCIS), Frontiers in Education, and Lecture Notes in Networks and Systems, each producing seven documents. The Journal of Information Technology Education: Research, Education and Information Technologies, and IEEE EDUCON complete the top ten list with six, five, and five publications, respectively. Despite similar productivity levels, citation patterns vary significantly: Education Sciences (894 citations) and Education and Information Technologies (443 citations) stand out as high-impact journals, indicating that the influence of publications is not always proportional to the number of documents produced. This divergence between productivity and impact aligns with previous bibliometric findings showing that open-access and well-indexed journals tend to accumulate more citations due to their broader visibility and interdisciplinary readership (Huang et al., 2024).
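The gap between productivity and impact can be made concrete by dividing each source's citations by its document count. The sketch below uses the figures reported in Table 1; the citations-per-document ratio is an illustrative indicator added here, not a metric reported by the study.

```python
# (source, documents, citations) as reported in Table 1.
TABLE_1 = [
    ("Cogent Education", 8, 146),
    ("Education Sciences", 8, 894),
    ("Journal of Applied Learning and Teaching", 8, 67),
    ("ASEE Annual Conference and Exposition", 7, 10),
    ("Communications in Computer and Information Science", 7, 73),
    ("Frontiers in Education", 7, 101),
    ("Lecture Notes in Networks and Systems", 7, 18),
    ("Journal of Information Technology Education: Research", 6, 86),
    ("Education and Information Technologies", 5, 443),
    ("IEEE Global Engineering Education Conference", 5, 17),
]


def citations_per_document(rows):
    """Return (source, citations / documents) pairs, highest ratio first."""
    ratios = [(name, cites / docs) for name, docs, cites in rows]
    return sorted(ratios, key=lambda pair: pair[1], reverse=True)


ranked = citations_per_document(TABLE_1)
for name, ratio in ranked:
    print(f"{name}: {ratio:.1f}")
```

On this ratio, Education Sciences and Education and Information Technologies lead by a wide margin, while several equally productive venues average fewer than twenty citations per document.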

Furthermore, the dominance of Education Sciences and Education and Information Technologies underscores their pivotal role in integrating AI applications with critical thinking frameworks in higher education. These journals not only publish frequently but also attract substantial scholarly attention, reflecting their capacity to bridge discussions among technology, learning analytics, and higher-order thinking. In contrast, technically oriented venues or conference proceedings such as ASEE Proceedings and CCIS exhibit lower citation rates, likely due to their focus on emerging, short-cycle studies. This pattern reinforces the notion that peer-reviewed journals with established academic reputations function as long-term repositories of impactful research, whereas conference proceedings primarily serve as incubators for early-stage ideas (Franco, 2017; Kochetkov et al., 2022). For future researchers exploring AI and critical thinking, these sources may serve as strategic publication targets. Subsequently, the results of the globally cited document analysis are presented in Table 2.

Table 2. Top Ten Most Globally Cited Documents

| Paper | DOI | Total Citations |
| --- | --- | --- |
| Dergaa et al. (2023), Biol. Sport | 10.5114/BIOLSPORT.2023.125623 | 474 |
| Michel-Villarreal et al. (2023), Educ. Sci. | 10.3390/educsci13090856 | 472 |
| Walter (2024), Int. J. Educ. Technol. High. Educ. | 10.1186/s41239-024-00448-3 | 331 |
| Thornhill-Miller et al. (2023), J. Intell. | 10.3390/jintelligence11030054 | 312 |
| Chan and Lee (2023), Smart Learn. Environ. | 10.1186/s40561-023-00269-3 | 290 |
| Mohamed (2024), Educ. Inf. Technol. | 10.1007/s10639-023-11917-z | 232 |
| Nikolic et al. (2023), Eur. J. Eng. Educ. | 10.1080/03043797.2023.2213169 | 215 |
| Malik et al. (2023), Int. J. Educ. Res. Open | 10.1016/j.ijedro.2023.100296 | 203 |
| Lo (2023), J. Acad. Librariansh. | 10.1016/j.acalib.2023.102720 | 202 |
| van den Berg and du Plessis (2023), Educ. Sci. | 10.3390/educsci13100998 | 195 |

Table 2 presents the ten most globally cited documents in the field of artificial intelligence (AI) and critical thinking development in higher education, revealing clear disparities in scholarly influence among publications. The work by Dergaa et al. (2023) received 474 citations, making it the most influential document within the dataset, followed closely by Michel-Villarreal et al. (2023) with 472 citations. Both publications substantially exceed the citation counts of other works, thus serving as pivotal references guiding subsequent research on AI-based educational innovation. Walter (2024) recorded 331 citations, Thornhill-Miller et al. (2023) obtained 312 citations, and Chan and Lee (2023) garnered 290 citations, indicating strong but secondary influence. Mid-range influential studies include Mohamed (2024) with 232 citations, Nikolic et al. (2023) with 215 citations, and Malik et al. (2023) with 203 citations, followed by Lo (2023) with 202 citations and van den Berg and du Plessis (2023) with 195 citations. This gradient of citation frequency reflects a citation concentration phenomenon, in which a small number of highly visible papers attract a disproportionate share of scholarly attention, shaping conceptual frameworks and methodological standards within the domain. Such citation asymmetry is characteristic of emerging interdisciplinary fields, where a few pioneering studies establish foundational directions for future inquiry and practice (Ke, 2020).
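One simple way to quantify the citation concentration described above is the share of citations held by the top-ranked papers. The sketch below uses the counts listed in Table 2; the share is computed within the top ten list only, so it says nothing about the full dataset, and the `top_share` helper is an illustrative name rather than a measure used by the study.

```python
# Total citations of the ten documents listed in Table 2.
TABLE_2_CITATIONS = [474, 472, 331, 312, 290, 232, 215, 203, 202, 195]


def top_share(citations, k):
    """Fraction of all listed citations held by the k most-cited items."""
    ranked = sorted(citations, reverse=True)
    return sum(ranked[:k]) / sum(ranked)


share_top_two = top_share(TABLE_2_CITATIONS, 2)
print(f"Top two papers hold {share_top_two:.1%} of the listed citations")
```

Within this list, the two leading papers account for roughly a third of the citations, which is the kind of skew the paragraph refers to.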

Several of the most-cited documents exemplify this phenomenon. Dergaa et al. (2023) explore the prospects and risks of generative AI tools such as ChatGPT in academic writing, emphasizing how these tools can enhance efficiency while simultaneously challenging the authenticity of critical reasoning. Meanwhile, Michel-Villarreal et al. (2023) adopt an ethnographic approach to examine ChatGPT’s role in higher education, highlighting the need for institutional policies, ethical frameworks, and the cultivation of reflective student engagement. The emergence of van den Berg and du Plessis’ work further signals that structural and curricular transformation, rather than mere tool adoption, lies at the core of this research domain. Collectively, these themes indicate a dominant research focus on the intersection between generative AI, academic integrity, and critical thinking development in higher education. For future researchers, engaging with these highly cited works provides both theoretical foundations and strategic guidance for defining research scope and selecting high-visibility publication venues aligned with these influential studies. Subsequently, the analysis of impactful authors is presented in Figure 2.

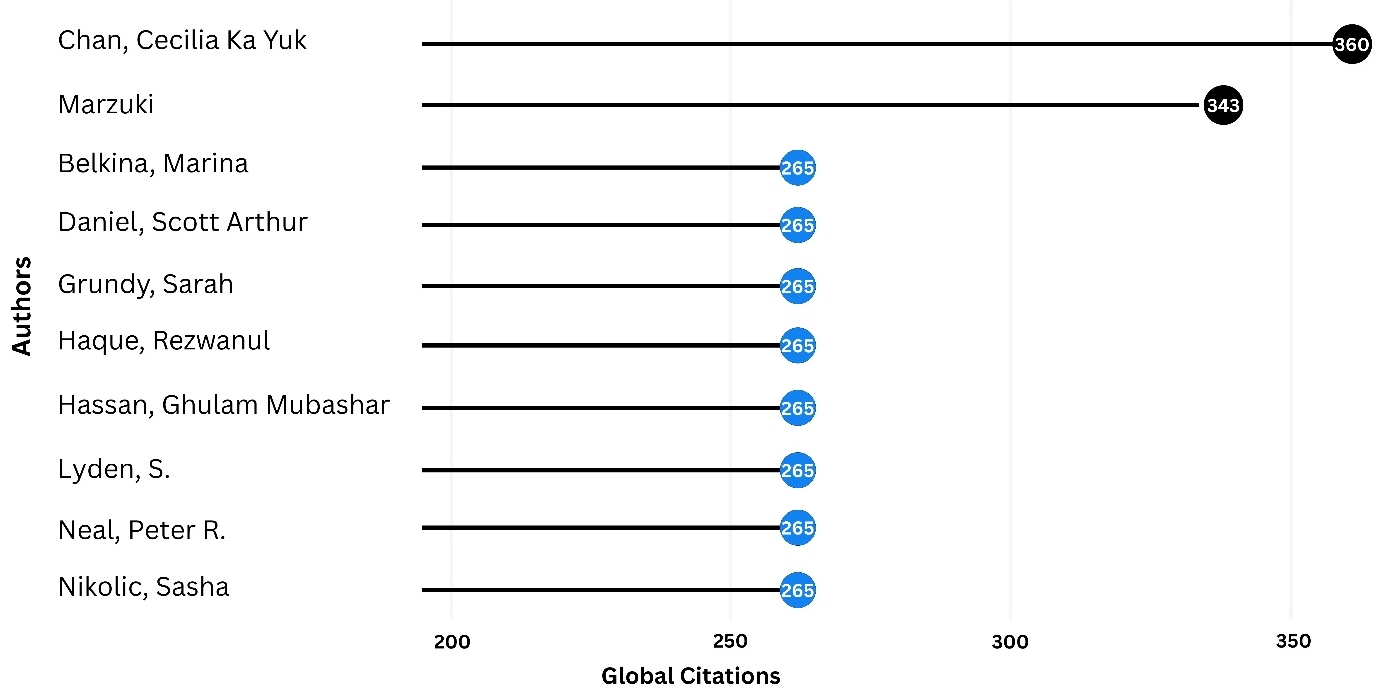

Figure 2. Top Ten Impactful Authors

Figure 2 identifies several influential authors in this publication network, with Chan leading the list with 360 global citations, followed by Marzuki with 343 citations. Meanwhile, Belkina, Daniel, Grundy, Haque, Hassan, Lyden, Neal, and Nikolic each received 265 citations, forming a cluster of mid-tier yet impactful contributors. Within this scope, Chan’s research focuses on pedagogical adaptation and generational differences in the adoption and use of AI in higher education (Chan & Lee, 2023). Her studies examine how factors such as technological readiness, digital confidence, and ethical perception influence the ability of educators and students to employ AI critically and responsibly. Her findings affirm that the implementation of generative AI in teaching should be accompanied by pedagogical innovation, ethical reflection, and professional development for educators to ensure that digital transformation does not erode authentic thinking and humanistic values in the learning process (Chan & Lee, 2023; Chan & Tsi, 2024).

Meanwhile, Marzuki contributes through research exploring students’ experiences and perceptions of AI use in academic activities, particularly in language-based learning and academic writing contexts (Malik et al., 2023). His studies provide empirical foundations for understanding how students’ interactions with AI affect cognitive processes, creativity, and critical reasoning, emphasizing the importance of balancing technological assistance with authenticity in learning (Darwin et al., 2024; Werdiningsih et al., 2024). In parallel, Nikolic and collaborators examine the implications of AI use for academic integrity and assessment systems in engineering and STEM education, identifying risks of misuse, policy gaps, and the need for institutional frameworks that ensure fair and ethical evaluation (Nikolic et al., 2023, 2024). Collectively, these three authors represent the three major axes of AI research in higher education: human-centered pedagogy (Chan & Lee, 2023; Chan & Tsi, 2024), authentic and reflective learning (Darwin et al., 2024; Malik et al., 2023; Werdiningsih et al., 2024), and integrity-driven assessment (Nikolic et al., 2023, 2024).

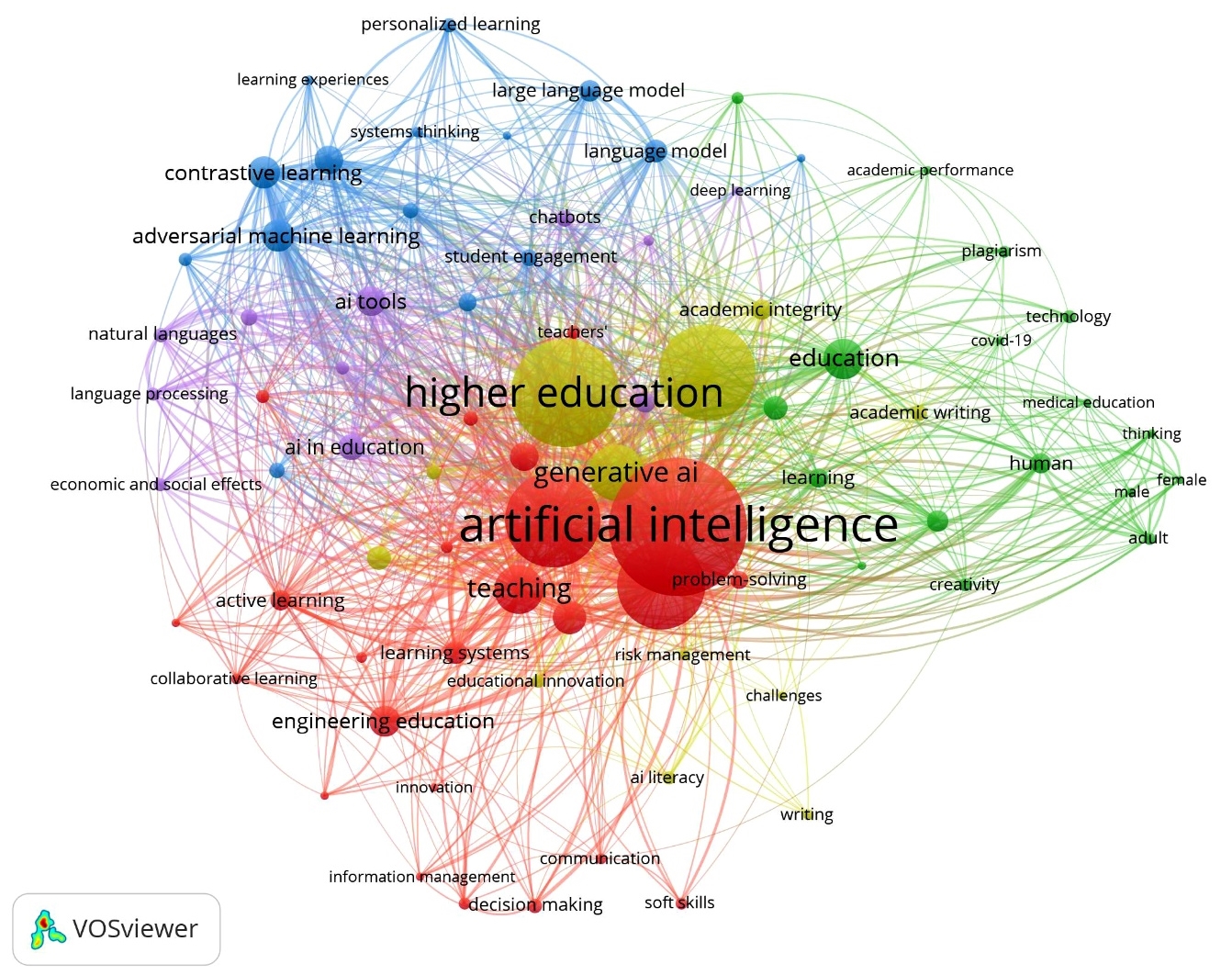

Figure 3. Keyword Network Visualization

Furthermore, the VOSviewer analysis, based on a minimum keyword occurrence threshold of five, identifies five interconnected clusters that collectively construct the intellectual structure of current research on artificial intelligence (AI) and critical thinking development in higher education, as presented in Figure 3. Cluster 1 (red), consisting of 24 items such as artificial intelligence, student, critical thinking, curriculum, teaching, pedagogy, teachers, active learning, learning systems, learning process, and problem-solving, represents the pedagogical and instructional dimensions of AI integration. Studies in this cluster emphasize how AI is integrated into curricula to promote higher-order thinking, problem-solving, and reflective learning practices. This finding aligns with recent work observing that educational AI research is moving beyond tool adoption toward pedagogical redesign centered on metacognition, inquiry-based learning, and their integration with technology (Baskara, 2023; Imran et al., 2024). However, while these studies highlight the positive role of AI in fostering student engagement and personalized learning, other scholars, such as Khan et al. (2024) and Ogunleye et al. (2024), warn that without teacher preparedness and ethical guidance, such innovations may risk creating cognitive dependency or superficial understanding. Overall, this cluster highlights a dynamic tension between technological facilitation and the preservation of authentic critical thinking, a central theme also reflected in the preceding citation and authorship analyses.

Cluster 2 (green) and Cluster 4 (yellow) capture the ethical, evaluative, and institutional dimensions of AI in education. The green cluster, comprising 17 items including education, assessment, learning, e-learning, student learning, human, creativity, plagiarism, technology, adult, and thinking, reflects research exploring how AI reshapes academic evaluation and creative learning processes. Meanwhile, the yellow cluster, consisting of 13 items such as higher education, ChatGPT, generative ai, academic integrity, academic writing, university students, ai literacy, risk management, ethics, and challenges, captures the emerging discourse on generative AI and its implications for academic honesty. These themes correspond to studies emphasizing the necessity of ethical responsibility in the use of AI in higher education, including the development of institutional policies and adaptive evaluation guidelines suited for digital learning contexts (Atenas et al., 2023; Khan et al., 2024; Wang et al., 2024). The emphasis on integrity and authenticity aligns with the findings of Dergaa et al. (2023) and Michel-Villarreal et al. (2023), who argue that generative AI tools such as ChatGPT pose serious challenges to authorship authenticity and critical thinking assessment. Conversely, studies such as Walter (2024) and van den Berg and du Plessis (2023) highlight the positive opportunities offered by AI integration in promoting creativity and student engagement when supported by strong digital literacy and ethical policies. Together, these clusters indicate that global research directions are shifting from mere technological adoption toward approaches that balance digital innovation, academic honesty, and institutional accountability as foundational principles of educational transformation in the AI era. As Rane et al. (2024) point out, there is a growing need to design new academic policies to mitigate AI misuse while promoting ethical student practice.

Cluster 3 (blue) and Cluster 5 (purple) represent the technical and applicative dimensions of AI research in education. The blue cluster, which includes 15 items such as contrastive learning, adversarial machine learning, language model, large language model, student perspectives, federated learning, personalized learning, systems thinking, and data privacy, indicates increasing attention to the development of large language models (LLMs), federated learning, and adaptive systems that safeguard data privacy while supporting personalized learning. Meanwhile, the purple cluster, comprising 11 items such as ai tools, ai in education, ai chatbots, chatbots, deep learning, natural languages, language processing, and educational technology, focuses on the practical application of AI technologies in learning contexts, for example, using chatbots as virtual tutors, teaching assistants, and language learning aids. This pattern aligns with studies suggesting that the focus of AI-based education research has shifted from algorithmic development toward more contextual, application-driven approaches aimed at improving learning quality (Esakkiammal & Kasturi, 2024; Guettala et al., 2024). Compared with previous findings, however, the present clustering results demonstrate a closer integration between technical and pedagogical discourse, where issues such as data privacy and academic integrity increasingly appear together in the same research discussions. This finding indicates that AI research in higher education is progressing toward a phase of conceptual consolidation, in which technological development, ethical policy, and pedagogical innovation are viewed as interdependent elements in shaping responsible learning practices in the AI era (Chaparro-Banegas et al., 2024; Chia et al., 2024; Malik et al., 2023).
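The role of the minimum occurrence threshold in building the keyword map can be sketched as follows. This is a simplified illustration under stated assumptions: occurrences are counted as the number of documents containing a keyword, the per-document keyword lists are hypothetical, and the clustering step performed by VOSviewer is not reproduced.

```python
from collections import Counter
from itertools import combinations

MIN_OCCURRENCES = 5  # threshold reported for the keyword map

# Hypothetical keyword lists, one per document.
documents = (
    [["artificial intelligence", "critical thinking", "chatgpt"]] * 5
    + [["artificial intelligence", "critical thinking"]] * 2
    + [["chatgpt", "ethics"]] * 3
)


def frequent_keywords(docs, min_occurrences):
    """Keywords that appear in at least `min_occurrences` documents."""
    counts = Counter(kw for doc in docs for kw in set(doc))
    return {kw for kw, n in counts.items() if n >= min_occurrences}


def cooccurrence(docs, keep):
    """Count how often each pair of retained keywords shares a document."""
    links = Counter()
    for doc in docs:
        kept = sorted(set(doc) & keep)
        links.update(combinations(kept, 2))
    return links


keep = frequent_keywords(documents, MIN_OCCURRENCES)
links = cooccurrence(documents, keep)
print(sorted(keep))
print(links.most_common())
```

Here "ethics" occurs in only three documents, so it is dropped before any links are counted; the remaining link strengths are what a clustering algorithm would then group into colored clusters.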

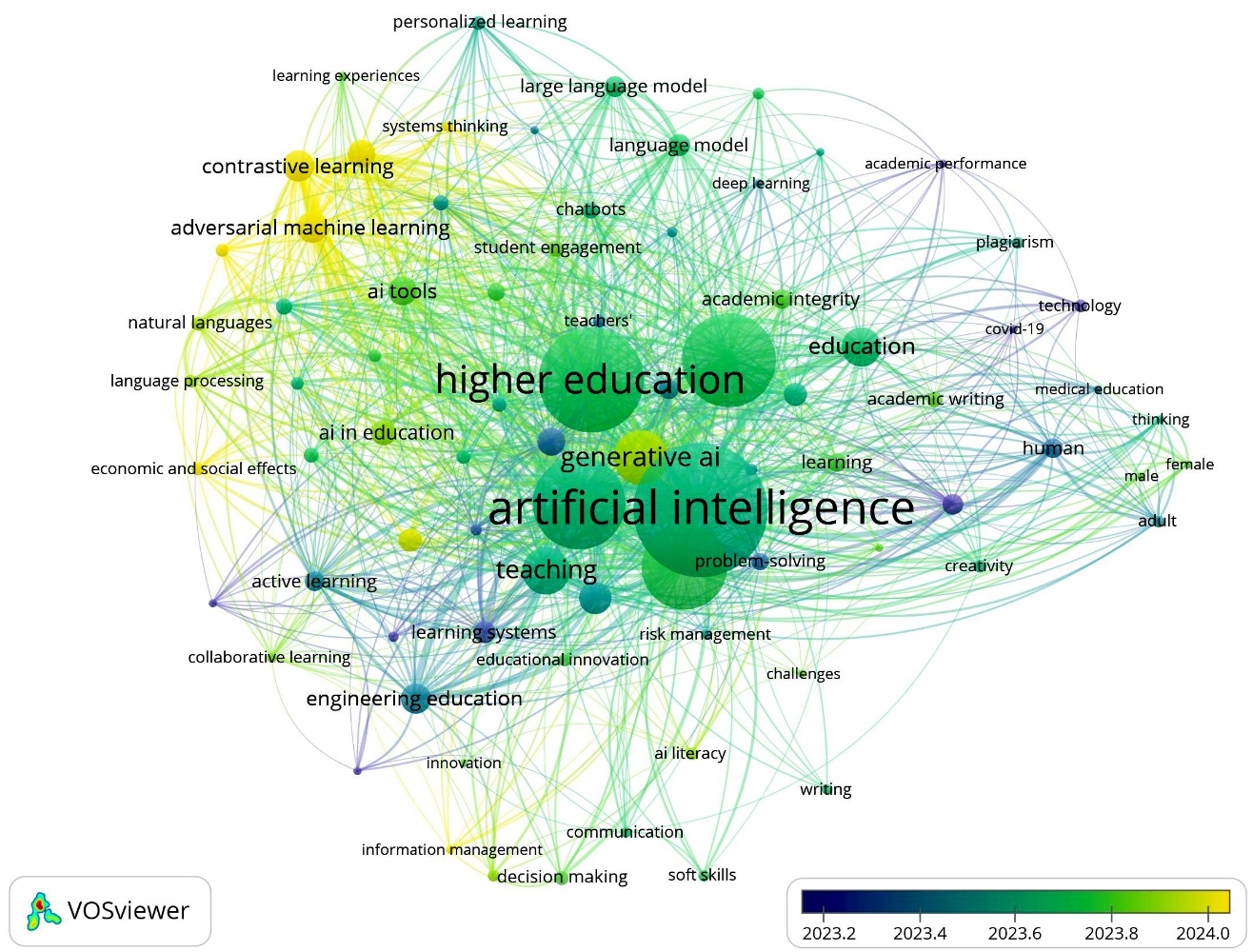

Figure 4. Keyword Overlay Visualization

Finally, the overlay visualization provides temporal evidence of how research on artificial intelligence (AI) and critical thinking in higher education has evolved from conceptual exploration toward systemic and multidisciplinary inquiry, as shown in Figure 4. The most recent keywords highlighted in yellow—including university students, information management, economic and social effects, generative adversarial network, contrastive learning, adversarial machine learning, federated learning, systems thinking, and generative AI—illustrate the rapid expansion of focus areas in recent years. These terms indicate that academic attention is shifting from pedagogical integration to technological sophistication, data governance, and socio-institutional accountability (Chan & Lee, 2023; Tarisayi, 2024). The growing prominence of federated learning and adversarial machine learning suggests increased concern for data privacy, model resilience, and fairness in AI-enabled education, reflecting global trends in responsible AI research (Moshawrab et al., 2023). Similarly, the emphasis on systems thinking and economic and social effects demonstrates emerging recognition that AI implementation in higher education cannot be separated from broader societal systems (Walter, 2024). This observation aligns with the discussions of Almaraz-López et al. (2023) and Chadha (2024), who noted that educational AI should be understood not merely as a pedagogical innovation but as a socio-technical ecosystem that reshapes institutional governance, labor structures, and learning equity.

In addition, the emergence of generative AI, information management, and university students in the latest research timeline indicates a shift toward human-centered and ethical AI research, emphasizing digital literacy, student agency, and institutional readiness. These developments echo the findings of Dergaa et al. (2023) and Walter (2024), who identified a growing tension between technological acceleration and the preservation of authenticity and academic integrity. The increasing focus on contrastive learning and generative adversarial networks (GANs) represents a deepening of technical sophistication in educational AI, where algorithms originally designed for creative generation and self-supervised learning are being adapted to enhance personalized and adaptive education. Such innovations reveal promising new research frontiers, including (1) ethical architectures for decentralized AI learning systems that preserve privacy and fairness; (2) AI-based cognitive analytics to monitor and enhance students’ critical thinking development; (3) integrative frameworks for systems thinking that connect technological, economic, and human dimensions of AI adoption; and (4) policies to manage the socio-economic impacts of generative AI on higher education labor and equity. Collectively, these trajectories highlight that the boundaries of AI-in-education research are maturing toward interdisciplinary synthesis—combining technical, ethical, and pedagogical expertise to ensure that future AI ecosystems in higher education remain inclusive, transparent, and human-centered (Bond et al., 2024; Jacques et al., 2024).

Concepts and Constructs of AI-Integrated Critical Thinking in Higher Education

Table 3 summarizes the results of the synthesis of 34 documents that discuss the conceptualization of critical thinking by the authors reviewed. This section also includes the construct of critical thinking integrated with AI in higher education.

Table 3. Constructs of Critical Thinking in the AI Era

| Author (Year) | Concept of Critical Thinking | Construction of Critical Thinking in the AI Era |
| --- | --- | --- |
| Tsopra et al. (2023) | Digital health reasoning; analytical–clinical integration. | Project-based Artificial Intelligence–Clinical Decision Support System (AI-CDSS) design integrating clinical, technical, and ethical reasoning. |
| Malik et al. (2023) | Active, analytical, and constructive cognition. | Balanced human–AI interaction fostering evaluation and reflection. |
| Al Ka’bi (2023) | Not mentioned | AI as a support for analytical and creative thinking. |
| Michalon and Camacho-Zuñiga (2023) | Responsible and context-sensitive reasoning. | Verification of AI errors fostering rational skepticism. |
| Chia et al. (2024) | Evaluative reasoning within AI literacy. | Emphasis on ethical evaluation and information credibility. |
| Mirón-Mérida and García-García (2024) | One of the 4Cs: critical and creative thinking. | Reflective AI use through debates and active learning. |
| Atenas et al. (2023) | Interdisciplinary and reflective problem-solving. | Critical data literacy and ethical engagement with AI. |
| Bozkurt et al. (2024) | Evaluative and reflective capacity. | Self-regulation and ethical evaluation of AI outputs. |
| Crudele and Raffaghelli (2023) | Reasoning, reflection, and argumentation. | Argument mapping to enhance analytical reasoning. |
| Michel-Villarreal et al. (2023) | Reflective and evidence-based reasoning. | Human reflection balanced with AI assistance. |
| Räisä and Stocchetti (2024) | Epistemic awareness of knowledge formation. | Reflection on AI opacity and cognitive autonomy. |
| Quintero-Gámez et al. (2024) | Not mentioned | Not specified; AI as predictive analytical tool. |
| Klimova and de Campos (2024) | Cognitive and ethical reasoning. | Information evaluation and prompt literacy. |
| Costa et al. (2024) | Higher-order cognitive and reflective skill. | Ethical use and verification of AI-generated information. |
| Valova et al. (2024) | Analytical, ethical, and epistemic competence. | Responsible AI use with ethical guidance. |
| Asamoah et al. (2024) | Evaluative, analytical and independent reasoning. | Domain Knowledge, Ethical Acumen, and Query Capabilities (DEQ) model: domain knowledge, ethics, and questioning. |
| Werdiningsih et al. (2024) | Evaluative and originality-preserving reasoning. | Ethical AI use under educator supervision. |
| Chaparro-Banegas et al. (2024) | Reflective and skill-based process. | Ethical and transparent AI integration. |
| Ruiz-Rojas et al. (2024) | Reflective analysis, evaluation, and autonomy. | Generative AI use enhancing creativity and reflection. |
| Darwin et al. (2024) | Skeptical, analytical, and rigorous reasoning. | Human oversight of AI to sustain critical inquiry. |
| Banihashem et al. (2024) | Contextual and reflective cognition. | Human–AI collaboration through critical prompting. |
| Wang et al. (2024) | Original, creative, and reflective authorship. | Evaluation and revision of AI outputs. |
| Zhou et al. (2024) | Analytical, inferential, and metacognitive reasoning. | Active AI engagement enhancing self-regulation. |
| Borkovska et al. (2024) | Analytical and communicative soft skill. | AI-supported reflective and collaborative learning. |
| Ogunleye et al. (2024) | Evaluative and problem-solving competence. | Authentic assessments beyond AI capacity. |
| Sarwanti et al. (2024) | Deep and rigorous cognitive process. | Guided and responsible AI use. |
| Jayasinghe (2024) | Higher-order reflective problem-solving. | AI-facilitated Socratic dialogue and feedback. |
| Khan et al. (2024) | Analytical judgment and factual evaluation. | Human–AI collaboration with ethical literacy. |
| Avsheniuk et al. (2024) | Multidimensional reasoning (analysis, evaluation, creativity). | Ethical AI–human synergy promoting reflection. |
| Broadhead (2024) | Analytical and interpretive intellectual freedom. | Resistance to AI bias and preservation of autonomy. |
| Furze et al. (2024) | Contextual and evaluative human reasoning. | Human evaluation via AI Assessment Scale (AIAS). |

| Liu and Tu (2024) | Purposeful and reflective judgment. | AI-based SSI learning model encouraging debate. |

| Almulla and Ali (2024) | Active human cognitive engagement. | Pedagogical balance between human and AI reasoning. |

| Zang et al. (2022) | Cognitive understanding (implicit). | AI–5G integration enhancing reflective learning. |

Table 3 summarizes how the reviewed studies conceptualize and construct critical thinking in the AI era. Critical thinking in the AI era is consistently conceptualized as a multidimensional and reflective cognitive process encompassing interpretation, analysis, evaluation, inference, and self-regulation, in line with the frameworks of Facione (2023) and Paul and Elder (2019). These studies collectively affirm that critical thinking remains a human-centered intellectual and ethical capacity, one that involves questioning assumptions, assessing evidence, and applying metacognitive regulation to reach reasoned judgments. Scholars such as Malik et al. (2023), Ruiz-Rojas et al. (2024), Zhou et al. (2024), Darwin et al. (2024), and Avsheniuk et al. (2024) emphasize that AI can catalyze deeper analysis and reflection when integrated thoughtfully into learning processes. In this sense, AI becomes a cognitive scaffold that encourages learners to compare, verify, and critique machine-generated outputs, thereby reinforcing analytical, evaluative, and inferential dimensions of critical thinking (Costa et al., 2024; Jayasinghe, 2024; Liu & Tu, 2024; Michalon & Camacho-Zuñiga, 2023; Tsopra et al., 2023).

At a broader level, several authors describe evaluative and ethical reasoning as core constructs of critical thinking in the AI era. Studies such as those by Bozkurt et al. (2024), Chia et al. (2024), Atenas et al. (2023), Asamoah et al. (2024), Klimova and de Campos (2024), Valova et al. (2024), and Werdiningsih et al. (2024) conceptualize critical thinking as the ability to use AI responsibly, incorporating ethical awareness, data literacy, and epistemic sensitivity. These scholars argue that critical thinking now extends beyond cognitive skills to include ethical discernment, transparency, and social accountability in interacting with intelligent systems. Such perspectives echo Paul and Elder's (2019) notion of intellectual virtues (fair-mindedness, humility, and integrity), highlighting that critical thinkers must not only reason effectively but also act ethically in complex technological contexts. Similarly, Broadhead (2024), Räisä and Stocchetti (2024), and Atenas et al. (2023) stress the epistemic dimension of critical thinking, warning that overreliance on AI may erode autonomy and reflexivity, leading to passive acceptance of algorithmic outputs instead of deliberate, evidence-based reasoning.

Meanwhile, a number of studies focus on metacognitive and reflective constructs of critical thinking that emphasize learning through interaction with AI. Authors such as Wang et al. (2024), Zhou et al. (2024), Crudele and Raffaghelli (2023), Borkovska et al. (2024), Banihashem et al. (2024), and Furze et al. (2024) highlight that metacognition (self-regulation, reflection, and awareness of one’s cognitive processes) becomes central to developing critical thinking in the AI-mediated learning environment. By analyzing AI-generated errors or inconsistencies, learners cultivate reflective skepticism and adaptive reasoning. Other studies, including Mirón-Mérida and García-García (2024), Ogunleye et al. (2024), and Sarwanti et al. (2024), identify authentic assessment, problem-solving, and dialogic learning as pedagogical conditions that sustain critical thinking and prevent cognitive dependency on AI. Collectively, these findings reveal that critical thinking in the AI era is constructed through reflective interaction, ethical awareness, and evaluative autonomy, requiring intentional pedagogical strategies to ensure that AI functions as an enhancer of, not a substitute for, human reasoning.

Overall, the literature demonstrates a converging view that critical thinking in the AI era integrates three interrelated constructs: (1) analytical-evaluative reasoning, (2) ethical-epistemic awareness, and (3) metacognitive reflection and regulation. These constructs align with the core dimensions identified by Facione (2023) and Paul and Elder (2019), but are expanded to encompass ethical literacy and technological reflexivity unique to the digital age. While AI provides new opportunities for cognitive stimulation, its pedagogical value relies on human guidance, reflective dialogue, and critical engagement.

Concepts and Constructs of AI-Integrated Critical Thinking in Higher Education

Table 4 summarizes the results of the synthesis of 34 documents that discuss strategies for developing critical thinking in the AI era in higher education.

Table 4. Recommended Strategies for Fostering Critical Thinking in Higher Education in the AI Era

| Author (Year) | Recommended Strategies |

| Tsopra et al. (2023) | Project-based learning as AI-CDSS designers; multidisciplinary integration (clinical, ethical, technical); interactive, innovation-oriented curriculum; cultivation of digital leadership and creativity |

| Malik et al. (2023) | Balanced human–AI collaboration; responsible AI literacy training; active faculty guidance and mentoring; emphasis on academic ethics and integrity; promotion of creativity and self-reflection |

| Al Ka’bi (2023) | Not mentioned |

| Michalon and Camacho-Zuñiga (2023) | Use AI inaccuracies for reflective learning; design activities verifying AI outputs; encourage iterative human–AI dialogue to foster analytical reasoning |

| Chia et al. (2024) | Responsible AI usage training; integration of AI literacy in curriculum; prompt-engineering instruction; treat AI as a supporting—not primary—source; strengthen verification and evaluation habits |

| Mirón-Mérida and García-García (2024) | Conscious and critical AI integration; use of engaging, personalized, paper-based, and oral tasks; building trust and academic integrity in classrooms |

| Atenas et al. (2023) | Embed data ethics and justice in curricula; ethical and socio-technical learning approaches; promote interdisciplinary dialogue and collaboration; empower students to challenge algorithmic inequality |

| Bozkurt et al. (2024) | Critical evaluation of AI outputs; reinforce higher-order thinking and creativity; redesign curriculum and assessment for reflection; foster ethical awareness and AI literacy |

| Crudele and Raffaghelli (2023) | Strengthen argumentative reasoning skills; use Argument Maps for logic visualization; apply hybrid learning environments; build digital literacy and manage cognitive load |

| Michel-Villarreal et al. (2023) | Interactive use of AI for discussion and debate; active teacher supervision; innovative and authentic assessments (“AI-proof”); promote AI ethics and literacy education |

| Räisä and Stocchetti (2024) | Develop epistemic and technological literacy; foster transparency and reflection on AI processes; rehumanize teaching and discussion; integrate critical philosophy of technology; train adaptive skepticism toward uncertainty |

| Quintero-Gámez et al. (2024) | Not mentioned |

| Klimova and de Campos (2024) | Critical evaluation of AI-generated content; develop prompt literacy skills; train ethical awareness and bias detection; integrate AI into peer feedback and authentic assessment |

| Costa et al. (2024) | Encourage verification of AI outputs; promote ethical AI use; design interactive, collaborative learning; reform curricula and assessments for high-level thinking |

| Valova et al. (2024) | Responsible and balanced AI integration; train evaluative and epistemic skills; enhance educator competency and AI ethics; embed verification and reflection practices |

| Asamoah et al. (2024) | Apply DEQ model (Domain Knowledge, Ethical Acumen, Querying); encourage reflective AI use; train effective question formulation (prompting); strengthen ethical awareness and institutional guidance |

| Werdiningsih et al. (2024) | Establish ethical AI-use guidelines; promote originality and integrity; encourage critical evaluation of AI suggestions; provide training for ethical, balanced AI integration; maintain human oversight and reflective learning |

| Chaparro-Banegas et al. (2024) | Active, participatory, experiential learning; ethical AI integration in dynamic classrooms; continuous digital and ethical training for educators; implement inclusive, transparent educational policies |

| Ruiz-Rojas et al. (2024) | Integrate AI pedagogically into curricula; utilize AI tools (ChatGPT, YOU.COM, ChatPDF, Tome AI, Canva) for analysis and collaboration; provide continuous training and ethical literacy |

| Darwin et al. (2024) | Balance AI use with human reasoning; maintain human oversight and skepticism; integrate AI in inquiry-driven, reflective pedagogy |

| Banihashem et al. (2024) | Combine AI and human feedback loops; use AI for descriptive assessment; preserve human contextual judgment; employ rigorous prompt design for reliable output |

| Wang et al. (2024) | Recognize AI limitations; personalize assignments to prevent automation; use demanding evaluation rubrics; train ethical and reflective AI engagement |

| Zhou et al. (2024) | Design user-friendly, purpose-driven AI tools; embed self-regulation strategies in AI-based learning; integrate AI contextually into curricula; train critical evaluation of AI outputs |

| Borkovska et al. (2024) | Personalize learning with AI interaction; use ChatGPT for critical reflection activities; encourage evaluation of AI results; balance AI use with social and emotional interaction |

| Ogunleye et al. (2024) | Redesign authentic and reflective assessments; employ AI for comparative and analytical exercises; revise curricula and enhance faculty AI competency |

| Sarwanti et al. (2024) | Provide structured guidance and training; establish institutional AI-use policies; redesign curricula to embed AI reflectively |

| Jayasinghe (2024) | Use AI for personalized feedback; apply problem-based and Socratic learning; facilitate collaborative discussions and self-reflection; support educator–AI co-teaching models |

| Khan et al. (2024) | Integrate EMIAS for critical information evaluation; combine AI with human judgment (human-in-the-loop); foster AI literacy and ethical policy debates |

| Avsheniuk et al. (2024) | Encourage critical engagement with AI; promote responsible and reflective AI use; maintain balance with traditional pedagogy; emphasize human judgment and creativity |

| Broadhead (2024) | Reinforce deep reading and argumentation; resist intellectual outsourcing to AI; challenge dominant paradigms and biases; critically evaluate technology’s purpose; preserve dialogic, human-centered education |

| Furze et al. (2024) | Apply AI Assessment Scale (AIAS) for ethical integration; center assessments on human reflection; use AI to support—not replace—reasoning; encourage evaluation of AI outputs at multiple levels |

| Liu and Tu (2024) | Implement AI-supported Socio-Scientific Issue (SSI) model; contextualize interdisciplinary learning; promote digital literacy, collaboration, and self-regulation |

| Almulla and Ali (2024) | Use AI complementarily, not substitutively; ensure ethical and balanced integration; strengthen digital literacy and evaluation skills; scaffolded, instructor-guided learning |

| Zang et al. (2022) | Integrate AI and 5G for interactive, personalized learning; promote deeper understanding through data exploration; use technology to enhance analytical reflection |

Table 4 shows that strategies for fostering critical thinking in higher education amid the rise of artificial intelligence (AI) converge around five main domains: (1) responsible and ethical AI integration, (2) curriculum and assessment redesign, (3) guided human–AI collaboration, (4) enhancement of metacognitive and dialogic practices, and (5) development of AI and data literacy. These findings can be interpreted through the conceptual frameworks of critical thinking proposed by Facione (2023) and Paul and Elder (2019), both of whom emphasize analysis, evaluation, and self-regulation as the foundation of reasoned and ethical judgment.

Responsible and ethical AI integration is the most prominent strategy identified across the reviewed literature (Almulla & Ali, 2024; Chia et al., 2024; Malik et al., 2023; Mirón-Mérida & García-García, 2024; Valova et al., 2024). The authors consistently argue that AI should function as a cognitive tool rather than a substitute for human reasoning. This finding aligns with Paul and Elder's (2019) principle of intellectual autonomy, which positions learners as active, reflective agents in their own thinking processes. Ethical integration involves explicit guidance, transparency, and academic integrity, cultivating what Facione (2023) calls intellectual responsibility and truth-seeking in learners’ engagement with technology.

Furthermore, curriculum and assessment redesign is viewed as essential to ensure that AI adoption does not diminish cognitive rigor (Bozkurt et al., 2024; Crudele & Raffaghelli, 2023; Ogunleye et al., 2024; Werdiningsih et al., 2024). This strategy emphasizes process-oriented learning and the creation of AI-proof assessments that require originality, logical reasoning, and personal reflection. Such approaches correspond with Paul and Elder’s intellectual standards of depth and significance, reinforcing the idea that critical thinking must emerge from intellectually engaged and contextually grounded learning experiences (Paul & Elder, 2019).

Guided human–AI collaboration also appears as a central strategy to promote reflective skepticism and active reasoning (Banihashem et al., 2024; Jayasinghe, 2024; Michalon & Camacho-Zuñiga, 2023; Tsopra et al., 2023). Under instructor supervision, students engage in iterative interactions with AI by verifying, analyzing, and refining AI-generated outputs. This process strengthens intellectual perseverance and open-mindedness (Facione, 2023), encouraging learners to construct understanding through dialogic inquiry between human and machine rather than accepting technological authority uncritically.

Additionally, several authors highlight metacognitive and dialogic practices as foundational to sustaining critical thinking in the AI era (Broadhead, 2024; Crudele & Raffaghelli, 2023; Liu & Tu, 2024). Activities such as reflective discussion, debate, argumentative writing, and Socratic questioning promote self-assessment and cognitive regulation. These practices embody Paul and Elder’s notion of thinking about one’s thinking, fostering continuous intellectual self-correction amid technologically mediated learning environments (Paul & Elder, 2019).

Finally, the development of AI and data literacy emerges as a key dimension of epistemic awareness in higher education (Atenas et al., 2023; Khan et al., 2024; Räisä & Stocchetti, 2024). Understanding algorithmic bias, data justice, and system transparency enables students to assess the reliability, accuracy, and fairness of AI-generated information. In line with Facione's (2023) emphasis on interpretation and evaluation, AI literacy enhances learners’ ability to navigate complex digital information critically and ethically.

Overall, the reviewed studies indicate that cultivating critical thinking in the AI era requires a balanced pedagogical ecosystem that harmonizes technological advancement with human intellectual agency. The most effective strategies position AI as a reflective partner that enhances and extends human reasoning without undermining intellectual autonomy. Higher education institutions are thus encouraged to integrate ethical and AI literacy modules within curricula, design assessments that foster analytical depth and originality, and strengthen instructor guidance to facilitate critical dialogue between students and technology. Through these approaches, AI functions not merely as a tool of automation but as a catalyst for developing reflective judgment, intellectual integrity, and self-directed thinking, three essential pillars of critical thinking as envisioned by Facione (2023) and Paul and Elder (2019).

Conclusion

This study aimed to systematically map and synthesize the global research landscape on the development of critical thinking in higher education within the context of artificial intelligence (AI). The bibliometric and content analyses reveal that Education Sciences and Cogent Education are the most productive sources in this domain. At the same time, Education and Information Technologies demonstrates the highest citation impact—highlighting the growing intersection between AI integration, pedagogy, and higher-order cognitive skills. At the document level, highly cited works such as Dergaa et al. (2023) and Michel-Villarreal et al. (2023) have shaped foundational debates concerning generative AI, academic integrity, and reflective engagement. Influential authors including Chan, Marzuki, and Nikolic further exemplify three key scholarly trajectories: human-centered pedagogy, authentic and reflective learning, and integrity-driven assessment. Thematic clustering of recent publications reveals five dominant areas—(1) pedagogical and instructional integration of AI, (2) ethical and evaluative dimensions in academic integrity, (3) technical and application-oriented AI models, (4) institutional accountability and policy frameworks, and (5) socio-technical systems thinking. Emerging themes such as generative AI, federated learning, contrastive learning, and data privacy underscore a transition from tool adoption toward systemic and interdisciplinary inquiry. Future research directions may include investigating ethical architectures for decentralized AI systems, AI-based cognitive analytics for assessing critical thinking, and socio-technical frameworks that connect technological innovation with equity and institutional governance in higher education.

The findings also illuminate how critical thinking is conceptualized and constructed within AI-mediated learning environments. Conceptually, most authors align with the multidimensional frameworks proposed by Facione (2023) and Paul and Elder (2019), viewing critical thinking as a reflective, evaluative, and ethical process of reasoning. In the AI era, this construct expands to encompass digital epistemic awareness: the ability to question the credibility, bias, and opacity of algorithmic knowledge. Across the reviewed literature, strategies for fostering critical thinking converge into three integrated approaches: ethically embedding AI in the curriculum, redesigning pedagogy and assessment to prioritize analysis and originality, and developing reflective human-AI collaboration through faculty mentoring processes to facilitate critical dialogue between students and AI. Collectively, these strategies aim to ensure that AI enhances rather than replaces human reasoning, reinforcing intellectual autonomy, integrity, and self-directed inquiry as the cornerstones of higher education.

Despite its contributions, this study has a key limitation: its conclusions rest solely on the Scopus database. This single-database analysis, while comprehensive, does not encompass all relevant studies indexed in other databases. Consequently, the thematic patterns and emphases identified in this study should be interpreted as representative of existing Scopus-indexed research. Future studies could address this limitation by incorporating multi-database searches (e.g., Web of Science, Dimensions, ERIC, and others).

Overall, the study offers important implications for higher education policy, curriculum design, and teaching practice. Universities should integrate AI and data ethics modules within curricula to cultivate responsible digital citizenship; instructors should adopt dialogic, inquiry-based pedagogies that engage students in evaluating and contextualizing AI outputs; and assessment systems should emphasize process, reasoning, and originality rather than automated efficiency. At the institutional level, transparent policies and continuous professional development programs are essential to maintain academic integrity and intellectual rigor in AI-augmented learning environments. Collectively, these measures will help position AI as a catalyst for reflective judgment and ethical reasoning—supporting the enduring mission of higher education to nurture thoughtful, autonomous, and critically engaged learners in the digital age.

Gratitude is extended to the Center for Higher Education Funding and Assessment, Ministry of Higher Education, Science, and Technology (Pusat Pembiayaan dan Asesmen Pendidikan Tinggi/PPAPT, Kementerian Pendidikan Tinggi, Sains dan Teknologi/Kemdiktisaintek) and the Education Fund Management Institution (Lembaga Pengelola Dana Pendidikan/LPDP) of the Republic of Indonesia for their assistance and financial backing in facilitating the author's academic pursuits.

Conflict of Interest Declaration

All authors affirm that there are no conflicts of interest in the composition and dissemination of this study.

Funding

This study was conducted without financial support from any funding agency, whether governmental, commercial, or non-profit.

Generative AI Statement

During the preparation of this manuscript, AI-based tools such as ChatGPT-5 were utilized to enhance readability, and Grammarly was employed to ensure grammatical accuracy. All outputs generated by these tools were subsequently reviewed, revised, and validated by the authors. The authors retain full responsibility for the accuracy, integrity, and content of the final published work.

Authorship Contribution Statement

Sitepu: Conceptualization, Formal analysis, Methodology, Writing – original draft. Prasojo: Supervision, Validation, Data curation. Hermanto: Supervision, Validation, Data curation. Salido: Validation, Data curation, Writing – original draft. Nurhakim: Methodology, Resources, Investigation. Setyorini: Formal analysis, Investigation, Writing – review & editing. Disnawati: Visualization, Writing – review & editing. Wiratsongko: Software, Investigation.