Introduction

The 2020/2021 academic year brought significant changes to university teaching because of the COVID-19 pandemic, which compelled higher education institutions to move their face-to-face courses to online platforms (Dapena et al., 2022). As a result, many activities involving contact with people, both at university and at work, were affected. In this exceptional context, the transition from face-to-face to emergency remote teaching (Pérez et al., 2021) posed a challenge for all levels of education, including higher education (Compare & Albanesi, 2022), and for their Service-Learning (S-L) projects. Beyond its health and economic dimensions, COVID-19 represented a crisis in which academia had to adjust and choose between connecting with reality or remaining within its traditional institutional framework.

In light of such profound and unforeseen changes, the S-L projects originally intended for in-person implementation in most universities could not proceed as scheduled (Waldner et al., 2012). The projects examined in our research, however, had been designed for online delivery from the outset. As a result, they were executed as planned during the 2020-21, 2021-22, and 2022-23 academic years, as part of the Teaching Innovation Group (TIG 2016/16) at the Universidad Nacional de Educación a Distancia (UNED, National University for Distance Learning). Additionally, the widespread use of new technologies in higher education has been accelerated by the pandemic. Within this context, the present study aims to analyze students’ perceptions of three virtual Service-Learning (vS-L) experiences, carried out in collaboration with the Madrid City Council, using data collected and processed through digital tools such as Google Forms, Microsoft Teams, and NVivo.

The evaluation of vS-L through a mixed-methods approach, as implemented in this research, is particularly significant because, to our knowledge, existing studies assessing virtual Service-Learning projects do not employ such an approach. The combination of quantitative and qualitative tools in the evaluation process enables the triangulation of findings, providing more robust and corroborated information. Consequently, the more comprehensive the evaluation results, the easier it becomes to strengthen the project’s positive aspects and mitigate its drawbacks in future iterations.

Recent comparative studies underscore this need. Ngai et al. (2023) analyzed 1,364 students in Hong Kong, comparing face-to-face and virtual Service-Learning during the pandemic through a pretest–posttest design based exclusively on validated self-report scales, without incorporating qualitative techniques that could enrich the interpretation of results. Similarly, Riaji et al. (2024) evaluated the impact of e-SL in engineering using a limited mixed-methods design (small sample sizes and t-test analyses complemented by interviews), concluding that the effects on technical competencies were equivalent across virtual and face-to-face modalities, yet without thoroughly exploring the underlying formative processes.

In the Spanish-speaking context, Andrade-Zapata and López-Vélez (2020) documented the adaptation of a face-to-face S-L project to the virtual modality during the pandemic in Ecuador through a descriptive case study. Although their analysis highlights creativity in virtualization and student protagonism, it lacks objective metrics and methodological triangulation to assess learning outcomes or community impact. These patterns align with Faulconer’s (2020) decade-long review of e-SL research, which critiques the field for relying predominantly on anecdotal evidence or self-reported measures, small samples, and limited consideration of long-term effects.

Moreover, some works associated with European initiatives, such as Culcasi et al. (2023) and Aramburuzabala et al. (2024), have proposed design frameworks for integrating virtual S-L with sustainability and inclusive digital education, highlighting the need for methodologically rigorous evaluations that combine quantitative and qualitative data. Nevertheless, these studies remain largely theoretical or co-design oriented, lacking direct empirical applications that demonstrate their effectiveness.

Against this backdrop, our methodological approach offers a mixed empirical evaluation within a European context, contributing both to the vS-L literature and to the practical improvement of such experiences. The integration of quantitative questionnaires with qualitative analysis via NVivo enables the identification of not only general trends in student satisfaction and perceptions but also nuanced discourses that inform future improvements in vS-L design and implementation. In line with Bukas Marcus et al. (2020), this approach facilitates a deeper understanding of the strengths and areas for enhancement in these projects, thereby optimizing their sustainability and impact.

In summary, our study is distinguished by its use of a mixed-methods evaluation approach, which remains uncommon in virtual Service-Learning research, combining quantitative metrics with structured qualitative analysis. It directly addresses the gaps identified in recent reviews (Camilli-Trujillo et al., 2022; Faulconer, 2020) concerning the limited methodological triangulation in this field, while providing European empirical evidence in an area largely dominated by Anglo-Saxon and Asian studies. Furthermore, it incorporates advanced technological tools, such as Google Forms, Microsoft Teams, and NVivo, as evaluative supports, aligning with ongoing trends of digitalization in higher education.

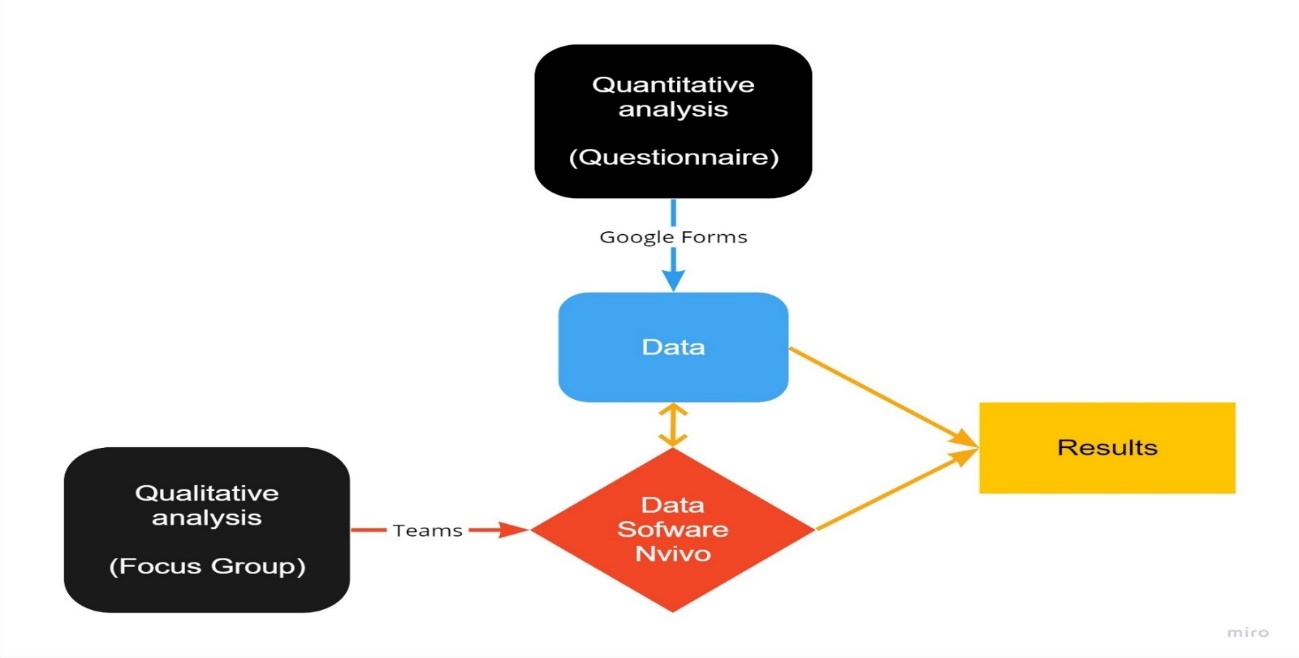

Therefore, from a methodological standpoint, as previously noted, this study introduces a significant innovation: the application of a mixed-methods approach to evaluate the outcomes of three fully online projects. The design combines a quantitative assessment questionnaire with a qualitative focus group, both of which were conducted virtually. Several digital tools supported the research process: (a) the UNED aLF platform, (b) Microsoft Teams for videoconferencing, and (c) NVivo software, which was used to triangulate the data obtained from the Google Forms online questionnaire and the focus group sessions. In this regard, the study is situated within the framework of Technology-Enhanced Learning as defined by Goodyear and Retalis (2010). Moreover, it entails significant changes in the learning model, fostering student training while transforming the traditional role of instructors (Dagnino et al., 2020), who are now subject to evaluation by their students. The adoption of a mixed-methods approach involved an intentional integration (Ramírez-Montoya & Lugo-Ocando, 2020), strategically leveraging the complementary strengths of both questionnaires and focus groups. This represents a methodological innovation in the evaluation of virtual Service-Learning, promoting a more holistic interpretation of its assessment (Ramírez-Montoya & Lugo-Ocando, 2020). The remainder of this paper is structured as follows: Section 1 reviews related studies employing mixed-methods evaluation; Section 2 outlines the methodology adopted; Section 3 presents the main results; Section 4 discusses the findings; Section 5 provides the conclusions; and Section 6 lists the references.

Literature Review

The evaluation of S-L projects in online environments has become increasingly relevant in educational research. Faulconer (2021) notes that, despite the limited number of studies focusing on evaluation, several benefits have been observed concerning online focus groups. These benefits include academic improvements such as a deeper grasp of content knowledge, the development of work and teamwork skills, increased career opportunities, and personal growth, leading to enhanced self-efficacy.

Regarding the evaluation of these projects, the literature differs in its views on the outcomes for students in face-to-face versus virtual settings. For example, Lin et al. (2022) have shown that online Service-Learning projects demonstrate similar positive student outcomes compared to traditional Service-Learning. However, Shek et al. (2022) have highlighted that students may hold differing opinions about the effectiveness of the online curriculum, instructor performance, and the project's contribution to achieving learning outcomes. Therefore, these subjective perspectives of students are essential for assessing the effectiveness of our projects, and their evaluation is of particular interest. As demonstrated by Resch and Schrittesser (2021), when students actively engage in addressing real-world needs, their perception of the university as an institution socially committed to its community is strengthened.

In this context, evaluating the implementation process, as outlined by Scheirer (1994), is essential to determine whether all components of a Service-Learning project are effectively executed and reach their intended target audiences. However, there is a notable scarcity of studies examining the implementation process of virtual Service-Learning projects (Linnan & Steckler, 2002), as most research has traditionally focused on the outcomes of face-to-face S-L initiatives. Moreover, the systematic evaluation of all components of an S-L project has not received sufficient attention in scholarly literature.

At the same time, the widespread adoption of digital technologies in higher education has been accelerated by the pandemic, which has driven the expansion of vS-L initiatives. Consequently, it is pertinent to explore students’ perspectives on vS-L projects as comprehensively as possible by combining quantitative and qualitative tools to capture their views.

Against this backdrop, our study presents the evaluation results of three vS-L experiences conducted in collaboration with the Madrid City Council. Data collection and analysis relied on technological tools such as Google Forms, Microsoft Teams, and NVivo, and employed a mixed-methods evaluation model combining quantitative analysis (structured questionnaires) with qualitative analysis (thematic coding of open-ended responses). This approach enabled data triangulation and the generation of stronger, corroborated evidence.

Such an approach is particularly relevant given that, as Camilli-Trujillo et al. (2022) point out in their systematic review of 93 S-L studies, only 16.1% explicitly reported mixed-methods designs, highlighting a clear methodological gap in the evaluation of such experiences. In response, this study directly addresses this gap by offering an evaluative model that moves beyond purely quantitative surveys or isolated narrative descriptions, which dominate much of the vS-L literature.

Studies such as the one presented here can enhance the success of vS-L projects by identifying issues in their implementation quality that can subsequently be addressed. In this sense, our research aligns with studies such as those by Culcasi et al. (2021), who emphasize the importance of mixed-methods designs, since their use enables evaluators to gain a deeper understanding of results by integrating multiple evaluation techniques and data sources.

These approaches, as emphasized in the literature, facilitate data triangulation from multiple perspectives, thereby enriching the understanding of both project outcomes and implementation processes. However, despite its potential to overcome the limitations of single-method evaluations, triangulation remains underutilized. As Kankaraš et al. (2019) note, triangulation is still rarely employed, partly due to practical constraints such as resource availability, the time required to integrate diverse techniques, and the analytical complexity of coherently combining quantitative and qualitative information. Additionally, they argue that the dominant methodological tradition in educational evaluation has historically favored single-measurement instruments, which has limited the adoption of more rigorous mixed approaches, such as triangulation, despite their ability to offer richer and more nuanced insights into the phenomena under study.

In this regard, data triangulation—applied here to integrate qualitative and quantitative dimensions—is highly relevant in educational research, as noted by Greene and McClintock (1985). Following their recommendations, our study combines questionnaires and focus groups to capture complementary aspects of the evaluation process. This dual approach enables cross-validation of findings from each method, reinforcing the credibility and internal validity of the results. By integrating these perspectives, we address potential biases inherent in single-method evaluations and offer a more comprehensive understanding of both outcomes and implementation processes in vS-L projects.

This aligns with our research objectives, which aimed not only to measure outcomes but also to explore participants’ lived experiences and the contextual factors influencing the effectiveness of virtual Service-Learning. Triangulation thus serves as a methodological bridge, strengthening the robustness of our conclusions and supporting the broader goal of generating actionable knowledge to improve educational practices in online environments.

Accordingly, this study presents a significant innovation in evaluating both traditional and fully online S-L projects. The proposed model also captures an element often overlooked by closed educational evaluation paradigms: the lived experience of participants, understood as a key source of insight into the real impact of virtual Service-Learning.

This perspective underscores the novelty and relevance of the research, positioning it as a substantial contribution to vS-L evaluation and as a replicable model for future iterations and varied institutional contexts. It moves beyond pandemic-driven adaptations by contributing to the consolidation of a stable model for online Service-Learning in both online universities and campus-based institutions seeking to integrate digital experiential learning.

Our research applies a mixed evaluation model built on two pillars: (1) asynchronous evaluation questionnaires (quantitative) completed by students and (2) synchronous, 90-minute online focus groups (qualitative) with 5–6 students each. Within this framework, the design integrates findings from a comprehensive evaluation conducted by the students themselves between 2020 and 2023. This evaluation draws directly from their narrated experiences and aims to foster transformative learning for all stakeholders, particularly the University, through the improvements identified in their feedback.

Methodology

As previously mentioned, the S-L projects involved students proposing indicators to evaluate three social programs implemented by the Madrid City Council: the Family Meeting Points (2020-21 academic year), the Family Support Centers (2021-22 academic year), and the Family Card (2022-23 academic year). Both the projects and their evaluation, which followed a mixed-methods (quantitative-qualitative) approach and whose results are presented here, were conducted entirely online (González-Rabanal & Acevedo Blanco, 2023; UNED, 2020). Thus, from a methodological perspective, the research falls under Type IV Service-Learning experiences (also referred to as Extreme Service-Learning) according to Faulconer (2021) and Waldner et al. (2012). This means that both implementation and evaluation are virtual.

To address the challenge of providing community service (in this case, to the Madrid City Council) within a non-face-to-face teaching model, it was crucial to meticulously plan all the tasks students needed to perform and to support and motivate them virtually (González-Rabanal & Acevedo Blanco, 2023). It was also necessary to redefine the instructor's role as a facilitator and to provide flexibility to adapt learning experiences to the learners' interests (Reeves & Reeves, 2008).

We chose the proposal of indicators for evaluating socially oriented public programs implemented by the City Council as the service because:

a) This form of service allowed the projects to be carried out fully and properly online during the pandemic, unlike those of other universities, which depended on face-to-face activity that the lockdown did not allow.

b) Part of the syllabus of the subjects taught by the teachers involved in the projects is devoted to the design and evaluation of public policies and programs of a social nature.

c) The service associated with the three projects improves students’ understanding of the indicators and their awareness of the Sustainable Development Goals (SDGs) to which they are related.

d) Proposing indicators to evaluate some of the Madrid City Council’s social programs makes students aware of their future professional responsibilities.

e) The use of indicators helps make the management of the Madrid City Council more transparent and accountable to citizens.

f) In addition, the initiative allows knowledge in the field of Social Sciences to be transferred to society (UNED, 2020).

After the projects were completed, they were assessed by the students through a mixed quantitative and qualitative online approach, following the recommendations of Fielding (2012) and Verd and López (2008). The findings from this evaluation are detailed later in this manuscript. During these three academic years, a total of 99 students participated in the projects, with 67 completing the questionnaire and 73 participating in the online focus groups.

Students took part in both the questionnaire and the online focus groups voluntarily; they were not selected by the teaching team, nor were any selection criteria applied. Their anonymity was preserved through the signing of a confidentiality agreement.

The questionnaire, hosted on Google Forms, helps link concept and procedure, which promotes constructive feedback (Cubero-Ibáñez & Ponce-González, 2020) and keeps the teachers responsible for the projects informed of the progress made. In this way, the assessment of the Service-Learning projects becomes one more stage in the learning process, informing the improvement of future experiences with this teaching innovation methodology carried out by our TIG 2016/16.

The online assessment questionnaire, completed voluntarily by the students, included several of the twelve dimensions proposed by Rubio et al. (2015). It was anonymous and consisted of 37 questions in the 2020–21 academic year. In the 2021–22 and 2022–23 academic years, seven items were added to evaluate the improvements made to the project in response to students’ feedback from the previous year.

The expansion of the questionnaire aimed to explore aspects that had not been initially considered but were deemed relevant in light of the first cohort’s responses, in order to refine the design of future projects. For example, based on students’ suggestions, a synchronous Microsoft Teams session was introduced at the beginning of the course, featuring a representative from the Madrid City Council involved in the project, to allow students to pose questions directly. This led to the inclusion of new items specifically designed to assess this activity.

Importantly, the addition of these items does not affect the comparability of the results presented in this study, as only responses to the core set of questions common to all iterations of the questionnaire were used for the analyses.

The items were grouped under several headings: (a) student profile, (b) motivation for enrolling in the degree and participating in S-L, and (c) students’ opinions on various aspects of the projects, including:

- the selection process,

- activities and tasks undertaken,

- the perceived relevance and interest of the project,

- perceptions of the additional workload generated by the project,

- the project’s contribution to their learning outcomes,

- whether they would recommend the experience to other students,

- support provided by the teaching staff,

- whether similar initiatives should be implemented in other courses, and

- overall satisfaction with the experience.

Additionally, students were asked about the attractiveness of UNED and online learning more broadly. All participants completed every item of the questionnaire.

The questionnaire comprises a mix of closed-ended questions, Likert scale questions rated from 1 to 5, and open-ended questions. The questionnaires can be accessed via the following links for the respective academic years:

- 2020-21 academic year: https://forms.gle/TuVXha9a2mnD5Juc6

- 2021-22 academic year: https://forms.gle/jzG8K2FF9USWFKB87

- 2022-23 academic year: https://forms.gle/g5sWoNLfXqC6GmS99

To conduct the focus groups, a formal virtual discussion environment was recreated, one in which each student participated from a computer, tablet, or other electronic device. This setting ensured that the debate remained analytically meaningful (Stewart & Shamdasani, 2017) while preserving the naturalness and fluidity of group interaction. The moderator guided participants’ contributions around three main rubrics, which are explained in detail below.

The focus groups were conducted as 90-minute online sessions with 5–6 students per group. This size aligns with methodological recommendations for virtual focus groups, which indicate that smaller groups (4–6 participants) foster interaction, enable more in-depth responses, and facilitate effective turn-taking management in online settings (Schulze et al., 2022). As these authors highlight, in virtual contexts, larger groups can impede dynamics and limit equitable participation, whereas excessively small groups reduce the diversity of perspectives necessary to enrich the discussion.

The focus group script, needed to elicit feedback and encourage the group to discuss the questions, was planned by our TIG 2016/16 around the following three dimensions:

a) Their participation in the projects.

b) The service provided, which consisted of proposing indicators to assess the Family Meeting Points (2020-21 academic year), the Family Support Centers (2021-22 academic year), and the Family Card (2022-23 academic year), three social programs of the Madrid City Council.

c) S-L as a methodological tool to deepen the design of indicators to assess social programs.

NVivo software was used to process the opinions gathered in the focus groups because it is well suited to this kind of qualitative analysis.

With these tools, we aimed to:

- Determine which aspects students value most, which they consider can be improved, and which they propose adding in future editions.

- Verify whether the results obtained through both assessment methods (quantitative and qualitative) are consistent.

For this purpose, the flow of the research procedure was as follows:

Figure 1. The Flow of the Investigation Procedure

The qualitative data were initially processed through automatic emergent coding in NVivo, which enabled the identification of recurring patterns and concepts within the focus group transcripts. This initial coding was subsequently reviewed and refined using axial coding, with the codes organized around predefined analytical dimensions. In this phase, the most representative verbatim responses were selected and integrated with the quantitative questionnaire responses in a mixed-data panel, enabling triangulation between the two data sources. This procedure enhanced the validity of the findings and facilitated a more comprehensive interpretation of the results by combining numerical evidence with the contextual richness of participants’ expressed views.

The data obtained through this procedure should not be read as statistically representative, because they reflect only the opinions of the students who participated in the S-L projects and voluntarily agreed both to complete the evaluation questionnaire and to take part in the online focus groups.

Table 1. Profile and Participation of vS-L Students by Academic Year (2020–2023)

| Variable | 2020–21 | 2021–22 | 2022–23 |
| --- | --- | --- | --- |
| Students by subject (n) | Public Management: 9; Social Policies: 17 | Public Management: 30; Planning and Assessment of Social Services: 15 | Public Management: 15; Planning and Assessment of Social Services: 13 |
| Questionnaires (n) | Public Management: 9; Social Policies: 10 | Public Management: 23; Planning and Assessment of Social Services: 10 | Public Management: 7; Planning and Assessment of Social Services: 8 |
| Focus groups (n) | Public Management: 12; Social Policies: 5 | Public Management: 15; Planning and Assessment of Social Services: 17 | Public Management: 10; Planning and Assessment of Social Services: 14 |
| Gender (%) | Male: 57.9; Female: 42.1 | Male: 57.6; Female: 42.4 | Male: 73.3; Female: 26.7 |
| Age (%) | <25: 21.1; 25–34: 5.3; 35–44: 21.1; 45–54: 42.1; 55–64: 10.5 | <25: 6.1; 25–34: 15.2; 35–44: 30.3; 45–54: 39.4; 55–64: 6.1; >64: 3.0 | 25–34: 6.7; 35–44: 26.7; 45–54: 60.0; 55–64: 6.7 |
| Educational level (%) | Bachelor’s degree: 26.3; Undergraduate degree: 15.8; Postgraduate: 26.3; Vocational training: 10.5; Doctorate: 5.3; Other: 15.8 | Bachelor’s degree: 12.1; Undergraduate degree: 21.2; Postgraduate: 9.1; Vocational training: 3.0; Other: 55.6 | Bachelor’s degree: 26.7; Undergraduate degree: 33.7; Vocational training: 26.8; Other: 13.2 |
| Employment status (%) | Employed: 73.7; Unemployed: 5.3; Long-term unemployed: 5.3; Other: 15.8 | Employed: 81.8; Unemployed: 9.1; Long-term unemployed: 3.0; Other: 9.1 | Employed: 73.3; Unemployed: 20.0; Seeking employment: 6.7 |

Note. Authors’ own elaboration.
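As a quick consistency check on the participation figures, the per-subject counts in the first three rows of the table above can be summed and compared with the totals reported in the text (99 participating students, 67 questionnaires, and 73 focus group participants). The sketch below is illustrative only: the dictionary keys and the function name `sum_by_measure` are hypothetical labels introduced here, not part of the study's tooling, and the response rate is simply the ratio of completed questionnaires to participating students.

```python
# Per-subject counts transcribed from the first three rows of the table above.
# Keys ("students", "questionnaires", "focus_groups") are illustrative labels.
counts = {
    "2020-21": {"students": [9, 17], "questionnaires": [9, 10], "focus_groups": [12, 5]},
    "2021-22": {"students": [30, 15], "questionnaires": [23, 10], "focus_groups": [15, 17]},
    "2022-23": {"students": [15, 13], "questionnaires": [7, 8], "focus_groups": [10, 14]},
}


def sum_by_measure(table):
    """Add up each measure across subjects and academic years."""
    totals = {}
    for year_data in table.values():
        for measure, per_subject in year_data.items():
            totals[measure] = totals.get(measure, 0) + sum(per_subject)
    return totals


overall = sum_by_measure(counts)

# Share of participating students who completed the questionnaire.
questionnaire_rate = overall["questionnaires"] / overall["students"]

print(overall)  # {'students': 99, 'questionnaires': 67, 'focus_groups': 73}
print(f"Questionnaire response rate: {questionnaire_rate:.1%}")  # 67.7%
```

The sums match the totals given in the text, and roughly two thirds of the participating students completed the questionnaire.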

Results

Experience Through the Use of the Mixed Method

The main novelty of our research lies in the fact that, to our knowledge, quantitative and qualitative evaluation tools have not previously been combined to assess Service-Learning projects developed entirely online and related to public welfare policies. The main results obtained from the questionnaire and the focus groups, as conclusive assessment tools of the innovation experiences developed, can be summarized as follows:

a) Results of the Assessment Questionnaire

Overall, the questionnaire responses show that students value the experience very positively: most consider it very rewarding and worth the effort (see Table 2, which details the students’ opinions on the three S-L projects). The students reported that, despite the additional workload associated with participating in the project, the scheduled tasks were essential to its development, were not excessive, and could be carried out at low cost; therefore, they indicated that they would not eliminate any of them. This aligns with their positive assessment of the project’s design, whose structure they considered appropriate.

As previously noted, this study does not constitute a statistical analysis but rather a qualitative inquiry aimed at exploring participants’ perceptions of the implemented vS-L experiences. The limited statistical data were drawn from the closed-ended items of the questionnaire, some of which are presented in Table 2. Notably, students did not express negative views about the project, a finding reflected in the consistently high levels of satisfaction reported across all editions. Some students suggested including a visit to the facilities of the Madrid City Council to meet the program managers in person. In response, a synchronous session via Microsoft Teams was introduced at the beginning of the project, bringing together students, members of the teaching team, and a representative from the City Council. As mentioned earlier, this initiative led to the inclusion of specific items in the questionnaire, allowing students to evaluate this new component. Others proposed that the City Council provide feedback on how the indicators generated through the students’ work (used to evaluate the social programs under study) had been applied in practice and to what extent they proved useful.

Across the three projects, the primary reasons for participating were, first, to complement their theoretical training; second, to gain a deeper understanding of the nature of this type of educational experience; and third, to develop protocols for applying and implementing the S-L methodology. These motivations reflect students’ interest in enhancing their education (in line with the objectives of the Bologna Process), improving their professional preparation, and acquiring skills to design indicators for assessing social programs.

The key findings from the questionnaires for the three projects are presented in the table below. The first column reproduces the wording of the questions included in the questionnaire, focusing on the most relevant items related to students’ motivation to participate —given that participation was voluntary— as well as aspects concerning project structure, the support received from the teaching team, the workload involved, and whether they found the experience engaging. Based on these perceptions, students were also asked whether they believed such initiatives should be implemented in other courses. The final item reports their overall evaluation of the experience, which, as shown, is highly positive.

Table 2. Comparative Analysis of the Results of the Questionnaire in Years 2020-21, 2021-22, and 2022-23

| AssessedAspect | Year 2020-21Meeting Points | Year 2021-22Family Support Centers | Year 2022-23 Family Card |

| Which have your motivations to join the experience been?(Order of preference) | 1. To complete theoretical training. 2. To improve my training for the professional world. 3. To know what this kind of educational experience consists of. 4. To learn the applicability of S-L in the UNED.5. To know the design and creation of indicators to assess social programs. 6. To build protocols to apply and execute the S-L methodology. | 1. To complete theoretical training. 2. To know the design and creation of indicators to assess social programs.3. To know what this kind of educational experience consists of.4. To improve my training for the professional world. 5. To learn the applicability of S-L in the UNED.6. To build protocols to apply and execute the S-L methodology. | 1. To complete theoretical training.2. To know the design and creation of indicators to assess social programs.3. To know what this kind of educational experience consists of.4. To improve my training for the professional world. 5. To learn the applicability of S-L in the UNED.6. To build protocols to apply and execute the S-L methodology. |

| Do you know the selection process? | Yes 73.7% | Yes: 84.8%I am not sure what the selection criteria have been: 9.1% | Yes: 93.3%I am not sure what the selection criteria have been: 6.7% |

| Do you consider the structure of the project is suitable? | Yes: 73.7%I do not have an informed opinion to answer: 26.3% | Yes: 93.9% | Yes: 80%I do not have an informed opinion to answer: 13.3% |

| Do the project tasks mean much workload? | Yes: 10.5% Yes, they do, but I must admit that they are essential for learning S-L: 42.1%No: 10.5%The tasks are not too many and they can be done pleasantly: 31.6% | Yes: 27.3% Yes, they do, but I must admit that they are essential for learning the S-L: 33.3%No: 9.1%The tasks are not too much, and they can be done pleasantly: 27.3% | Yes: 6.7%Yes, they do, but I must admit that they are essential for learning the S-L: 60%No: 6.7%The tasks are not too much, and they can be done pleasantly: 20% |

| Would you eliminate any task? | No: 94.7% | No: 93.9% | No: 86.7% |

| Was the effort worth it? | Yes: 100% | Yes: 90.9%Maybe: 9.1% | Yes: 80%Maybe: 13.3% |

| How do you value the support from the teaching staff? | Great support in all aspects asked | Great support in all aspects asked | Great support in all aspects asked |

| Do you think S-L should be applied to other subjects? | Yes: 89.5%; Maybe: 10.5% | Yes: 63.6%; Maybe: 27.3%; No: 9.1% | Yes: 60%; Maybe: 40% |

| What is your overall evaluation of the experience? | Very enriching: 84.2%; Generally positive: 15.8% | Very enriching: 87.5%; Generally positive: 12.5% | Very enriching: 46.7%; Generally positive: 46.7% |

Note: Authors’ elaboration from the students’ responses.

We find the questions posed to the students particularly relevant regarding the following aspects: (a) The structure, content, and execution of the projects. (b) The effort made during participation in the project. (c) The assessment of their personal experiences in such involvement. In the following three sections, we delve deeper into these three areas.

b) Results of the Online Focus Group

Our focus groups were carried out on the Microsoft Teams platform via online video calls, which Gegenfurtner et al. (2020) define as lessons to which both teachers and students connect and interact in real time thanks to the use of virtual platforms and applications. They were organized in the following three dimensions:

a) Their participation in the projects (see Table 3).

b) The service provided, which consisted of proposing indicators to assess the Family Meeting Points, the Family Support Centers, and the Family Card, three social programs of the Madrid City Council (see Table 4).

c) S-L as a methodological tool to deepen the design of indicators to assess social programs (see Table 5).

The results of the focus groups are organized based on the participants’ responses to the three rubrics that guided their contributions, while preserving the verbatim wording of their statements. The students said: a) they found S-L fascinating; b) they did something to help society which returned to them, which was great; c) they learnt and acquired parameters for improvement; d) S-L was a point in favor of the subject and they were thankful for using this methodology; e) it may be interesting to apply this experience to other subjects in the Degree; f) this way, it would be possible to assess the services and make proposals for improvement, as long as the Government Administration allows it.

As previously noted, the focus group moderator guided participants’ contributions around three dimensions to avoid dispersion. Accordingly, the following tables reproduce selected verbatim excerpts from students’ responses pertaining to each of these dimensions.

First Dimension: Their Participation in the Projects

Table 3. Verbatim Opinions on the Experience of Participating in a Service-Learning Project

| Verbatim opinions Project 2020/2021 | Verbatim opinions Project 2021/2022 | Verbatim opinions Project 2022/2023 |

| I found S-L fascinating, so as soon as I saw the proposal, I searched for it on Google to find out exactly what it was. And it is absolutely encouraging for me to be part of something that is really going to have consequences in society. | I completely agree; I find it so educational and useful that I think it is too brief and limited to just one subject. I have liked it a lot, a very nice way to learn and study. | Obviously, I value this methodology. Especially considering how different it is from the usual study method in distance learning universities. This new way of learning ultimately absorbs you. |

| I was not familiar with the S-L methodology; I came to know it through this work. I did not know this educational method before, and I think that it can be very interesting, as in addition, it has an aid character towards society. You not only learn a subject but also work with people who have some kind of need, and I consider it highly interesting. | As I have already said, I found it really interesting because I think it is something different. Instead of just sitting in front of a 500-page book, you do a more practical job. I have liked it. | It surprised me because I was unaware of it. It is true that, in the field I work in, active employment policies can have a social dimension, as our main audience consists of people seeking employment, many of whom belong to vulnerable groups. I liked it. Really, I liked it. |

| What most catches my attention is the combination of learning and service. I think it should be compulsory in education as this allows us to apply the theoretical concepts. You can study a lot and get good knowledge on the subject, but if you do not apply it afterwards, it is not as useful. I found S-L very, very interesting. | At the beginning, I was a bit worried about being able to assess something related to Madrid while I was in Mallorca. But then, while I was working on it I could compare it with the situation here or personal experiences and, in the end, the conclusion is that it has helped me a lot. It is reciprocal: from University, you do something to help society and then it goes back, which is great. | For me, stepping out of my comfort zone has been an enriching experience. I am happy to have done it and I think it’s great. |

Note: The transcriptions reproduce the students’ opinions verbatim.

Second Dimension: The Service Provided, Which Consists of Proposing Indicators to Assess the Family Meeting Points, the Family Support Centers, and the Family Card, Social Programs of the Madrid City Council

Table 4. Assessment of the Experience in the Creation of Indicators (Results of the Projects)

| Verbatim Opinions Project 2020/2021 | Verbatim Opinions Project 2021/2022 | Verbatim Opinions Project 2022/2023 |

| It seems very difficult to make any change. However, the Madrid City Council has sought a proposal for change with indicators, a proposal to improve, which has also reached us, so I feel fortunate. | Having worked with indicators has made me aware of the existence of this kind of help, which we would not otherwise know. For this reason, I think that this should be widely published, not only in Madrid, but in every Community. | It is essential for professionals to know how to create indicators to evaluate our actions, as resources in social policy are finite and we must use them efficiently and with quality. |

| Thank you very much. To me, it has been a very practical experience with which I have learned some knowledge I was lacking. At the beginning, it was difficult for me to understand the meaning of the indicators but later, thanks to the sessions held, I started to understand them and learn about their usefulness in the third sector projects. | We have really learned a lot, we have provided a service, and gained increasing knowledge. This is also a new learning model, a new way to assess performance and even accountability. You learn and also acquire parameters for improvement. It is a much more attractive way to learn how to work. | Evaluation and the creation of indicators are crucial. We need to assess whether the resources are being effective. It is a complex process that requires practical skills. |

| This method for building indicators is very appealing to me. I think that, as stated by many mates, there is a great lack of information in Public Management. We can propose quality indicators in line with common sense, that is, study if the real goal of Family Support Centers is really achieved. | It is a useful tool for daily work in which the indicator represents data collecting, the way it is going to be processed and which the conclusions will be, and then all these can be used again. The truth is that for me it is perfect. | I find it essential to study theoretical content and also have the opportunity to put it into practice. To be told 'this is how it's studied' and then see how it's actually used. For example, with the indicators, although we read a lot about how to create them, when I tried to do it myself, doubts arose. I wanted to create them, but I didn't know exactly how to formulate them to be valid. Even though I had the knowledge, I lacked the proper development. |

Note: The transcriptions reproduce the students’ opinions verbatim.

Third Dimension: Service-Learning as a Methodological Tool to Deepen the Design of Indicators to Assess Social Programs

Table 5. Assessment of the Service-Learning Experience as a Methodological Tool

| Verbatim Opinions Project 2020/2021 | Verbatim Opinions Project 2021/2022 | Verbatim Opinions Project 2022/2023 |

| Apart from the FMPs, many other issues can be addressed, and it is very enriching. Or at least this is what I feel. In addition, I have gained good training, I have reinforced my knowledge and it has been very useful to me. | In my opinion, it is a point in favor of this subject, and I am thankful for using this methodology. I have liked a lot to take part in it, and now that I have to study for the exams, I have a new approach for doing so thanks to this experience. | I see this project as very important for our training. You finish a degree and don't know where to start in the job market, especially in the private sector or the third sector, where you have to create projects or evaluations. The theory learned in the degree is not enough; you need ongoing training. This is very necessary in Higher Education, especially at UNED, where you don't have as much direct contact with professors. |

| In conclusion, it may be interesting to apply this experience to other subjects in the degree. I have studied several degrees in the UNED, and this experience would have been very useful and innovative for me in other subjects. | It seems a very pleasant experience to me, although I am influenced by my professional experience. I consider it a methodology which can be very beneficial, which allows you to practice, and, above all, it is absolutely necessary if assessment indicators are developed, and they may take professionals without experience much closer to the reality of the third sector. | As a resource, it seems very interesting and dignified, allowing families to decide what they buy. In Barcelona, we have the 'community tail rebounds', where families not only collect food but also choose products in a supermarket according to their preferences. |

| I think that a S-L experience is more interesting than the usual assessment works, as in S-L all the students participate in the same collaborative work instead of doing different projects. | At University, we have a theory about what it should be and sometimes it is a bit far from the reality of the services. This way, we could assess the services and make proposals for improvement, as long as the Government Administration allows it. | In summary, the S-L experience is very interesting. I have a significant doubt about the final outcome, whether it will be useful for something, and what the effectiveness and efficiency of this work will be. And certainly, yes. I tell you that indicators are the basis of continuous improvement in any organization, both industrial and Public Administration. |

Note: The transcriptions reproduce the students’ opinions verbatim.

Discussion

The results of this study demonstrate that using a mixed model (quantitative and qualitative) to evaluate S-L projects with online methodologies in the university setting is entirely feasible. They also show that students rated the experience very positively.

As we have already highlighted in our research, we employed online focus groups to explore the vS-L experiences as a digital tool to enhance students’ learning in Higher Education, rather than using mixed methods to assess webinars (Gegenfurtner et al., 2020). Our mixed-method approach aligns with the rational reconstruction proposed by Rubio et al. (2015) for conducting self-assessment of S-L projects, which integrates empirical material and theoretical reasoning through several phases. Our methodological steps involve identifying the relevant aspects to be assessed, formulating appropriate questions, and collecting, systematizing, and interpreting the results obtained.

The methodology applied here also includes some of the tools used by Mayor (2020) to collect students’ opinions, in particular, the assessment questionnaire; however, it replaces semi-structured group interviews with online focus groups. This approach enables a thorough evaluation to be carried out (Cubero-Ibáñez & Ponce-González, 2020), and the results of both tools (the questionnaire and the focus group) were compared and integrated. In this sense, our study is part of the methodological approach that surpasses the traditional confrontation between qualitative and quantitative methods of evaluation for obtaining data (Verd, 2022), and it also supports the integration of both methods in a mixed strategy, which goes beyond the multi-method options used by Verd and López (2008).

In addition, our work follows the third alternative proposed by Fielding (2012), the integration of data from qualitative and quantitative methods, but it also utilizes computer technology for processing the gathered information, which is the most relevant novelty. Most fundamentally, this integration allows us to address what is called the “challenge of humanization of virtual learning scenarios”: the search for ways of transmitting common human values in an increasingly globalized and technological world (Bringle & Clayton, 2020, p. 46).

Instead of applying a mixed-methods approach to the implementation stage of Service-Learning, we employed it in the evaluation of students’ experiences, that is, as a source of feedback for instructors. To successfully conduct this process, beyond familiarity with virtual contexts (which is inherent for all UNED students due to its distance learning model), participants require specific skills to use this tool effectively. These include external moderation, the use of a facilitation script (Stancanelli, 2010), recording and interpreting information, and analyzing interaction (Watson et al., 2006), all of which demand significant effort from the research team in terms of planning and execution, comparable to that involved in organizing video calls (Richard et al., 2021).

As described in the methodology section, NVivo software was used to process the data obtained from the focus groups, ensuring the adequate handling of aggregation issues when integrating results from quantitative and qualitative sources, as suggested by Andrew et al. (2008). From a methodological perspective, however, the results of our research must be interpreted considering potential limitations. One such limitation is the potential for volunteer bias, as most students involved in the Service-Learning projects completed the questionnaires and participated in the focus groups as an integral part of their full engagement with the experience. Consequently, the findings may primarily reflect the perceptions of individuals who were already sufficiently motivated to participate actively.

Additionally, there is a bias associated with online data collection, arising from interactional constraints in virtual focus groups. Factors such as connectivity issues, turn-taking management, and the absence of face-to-face contact may have restricted spontaneity and the depth of contributions.

Moreover, while the integration of qualitative and quantitative analyses within a mixed-data panel strengthened internal validity, the sample size does not support generalizable inferences to the wider student population. The results should therefore be understood as indicative and contextualized within the specific framework of these projects and the university in which they were developed. Despite these limitations, our findings support the positive implementation of virtual Service-Learning, not only as an emergency response in the post-pandemic context but as an emerging and consolidated educational modality capable of being stably integrated into both virtual and campus-based university environments.

The results obtained confirm the findings of Cabero-Almenara and Palacios-Rodríguez (2021) in two ways: (1) the online delivery of activities provides students with genuine learning opportunities, and (2) the e-assessment approach used in this study makes it possible to analyze the effectiveness of the applied methodology, as well as the resources and organizational structure employed, in line with the observations of Marcano et al. (2020).

It is worth noting that no contradictory results were found between the questionnaires and the focus groups. In both cases, students expressed a high level of satisfaction with their participation in the experience and recommended its application to other courses and degree programs. Furthermore, given the heterogeneity of UNED’s student body, we believe there are no institutional or cultural factors that might limit the generalizability of this type of experience, aside from the requirement that, as it is conducted entirely online, students must have access to an internet connection.

Moreover, the fact that the evaluated projects were linked to several Sustainable Development Goals (SDGs) enabled students to develop critical awareness and become familiar with specific social values, thereby aligning with the overarching aim of acquiring knowledge about the SDGs that is also beneficial for society. This is consistent with Blanco-Cano and García-Martín (2021), who emphasize the importance of Service-Learning as a pedagogical methodology. Therefore, we argue that such S-L experiences can help formalize public policies aimed at disseminating values related to the implementation of the 2030 Agenda among higher education students, thus contributing to their values-based education.

Furthermore, students reported feeling like agents of social change within the projects, as they proposed indicators to evaluate them, thereby reinforcing their sense of empowerment to participate in the more efficient management of public resources allocated by institutions—in this case, Madrid City Council—to address social needs. They also became more aware of the existence of these needs and their impact on significant segments of society.

For all these reasons, based on the experience gained through these projects, we believe that the mixed-methods evaluation approach we employed can be applied to other online courses, provided that, when designing the experiences, the community service component is structured in a way that does not require physical presence at the collaborating institutions. From our perspective, this would be the only limiting factor for implementing similar projects, as those we carried out involved students from anywhere in the world, as long as they had internet access. Besides, the evaluation system used (Google Forms and NVivo) enables the processing of data from larger populations with no significant difficulty.

Conclusion

The main objective of this research was to demonstrate the feasibility and value of evaluating vS-L experiences using a mixed quantitative–qualitative method. While some studies have assessed S-L projects, few have done so in fully online contexts, and even fewer have combined online questionnaires with online focus groups for their evaluation. Although online focus groups are relatively common in business and marketing research, their use in education remains limited.

Our study aimed not only to confirm the relevance of evaluating fully online S-L projects but also to demonstrate their viability. It shows that employing a mixed-methods approach is more enriching than using quantitative or qualitative instruments alone, as it enables triangulation of results from both perspectives. This work also illustrates how online focus groups can extend beyond their traditional role in marketing to serve as a valuable research tool in higher education.

This research confirms that S-L, developed here within an online university such as UNED, is effective in fostering students’ civic attitudes, critical thinking skills, and awareness of selected SDGs. It also improves their understanding of the efficient use of public resources dedicated to socially oriented programs, contributing to their development as responsible citizens.

Results obtained through this mixed-methods approach revealed high levels of student satisfaction and confirmed that vS-L projects significantly improved their training in indicator-based assessment. Students reported feeling actively involved in public accountability processes and expressed that such methodology should be integrated into other courses. They described the experience as enriching, complementing their theoretical training with practical insights. Despite the added workload, they considered the projects well-designed, would not remove any activities, and emphasized that these initiatives helped them approach their studies from a more applied and professional perspective.

The mixed assessment model provided deeper insights into students’ experiences, showing how such initiatives can transform perceptions and foster a more critical, professionally oriented outlook. These findings support vS-L as an effective tool for students in non-face-to-face universities to strengthen knowledge and skills relevant to the professional world.

From a teaching perspective, using a mixed-methods assessment improved understanding of how universities can engage students beyond theoretical learning, fostering positive attitudes toward S-L. The method confirmed satisfaction with participation, both in terms of academic training and contribution to Madrid City Council, reinforcing students’ perception of themselves as active contributors.

Finally, our research demonstrates that online focus groups can be successfully applied in educational contexts and need not remain confined to fields such as marketing or healthcare. Future research will focus on developing designs for online questionnaires and focus groups to evaluate other educational experiences, whether based on different pedagogical approaches or implemented in contexts that are not fully virtual, unlike UNED.

Recommendations

In light of the findings from our research, we recommend using mixed methods for evaluating virtual Service-Learning projects. The combination of questionnaires (quantitative) and focus groups (qualitative) enables the triangulation of results, enriching the contributions of participating students and informing the development of future projects.

Moreover, in the context of distance learning, the use of a synchronous tool such as focus groups makes it possible to “put a face” to project participants—albeit virtually—and facilitates more dynamic interaction than that offered by questionnaire responses alone. Therefore, the use of both methods significantly enhances the evaluation of these experiences and can be meaningfully applied to similar research endeavors.

Limitations

The potential limitations of implementing S-L projects online —particularly the lack of face-to-face interaction among participants and with teaching staff— can be largely mitigated through ongoing support, tutoring, and virtual meetings via videoconferencing tools. These meetings should include faculty, representatives of the partner institutions receiving the service, and the students.

Conducting focus group evaluations online, as in our projects, should not be considered a limitation if sessions are led by a skilled moderator who can guide the discussion toward the most relevant evaluation aspects. This requires prior planning of the main dimensions around which the dialogue will revolve. Moreover, the online format of S-L projects allows student participation regardless of geographic location, provided they have internet access, thereby giving these experiences a broader, more global scope.

Ethics Statements

The studies involving human participants were reviewed and approved by the National University for Distance Learning (UNED). The participants provided their written informed consent to participate in this study.

This research was supported by:

The University Institute for Distance Learning of the UNED to M.C. González-Rabanal (https://www2.uned.es/bici/Curso2020-2021/210517/30-0sumario.htm)

The UNED-Banco Santander Research, Transfer of Knowledge and Divulgation Award, in the Transfer category, in 2020 (https://www2.uned.es/bici/Curso2019-2020/200727/bici40completo.pdf)

Additionally, we are grateful to the General Directorate for Innovation and Social Strategy of the Madrid City Council.

Conflict of Interest

The authors declare that they have no competing interests.

Funding

This study received funding from the University Institute for Distance Learning of the UNED and the UNED-Banco Santander Research Award, Transfer category, 2020.

Generative AI Statement

No generative AI tools were used in the preparation or writing of this work.

Authors’ Contributions Statement

Although the article is the joint result of the work of both authors, who drafted and reviewed its entire content, the specific contributions of each were as follows. González-Rabanal: conceptualization, ideas, formulation or evolution of overarching research goals and aims; design of methodology; supervision; critical revision of manuscript; review and editing; visualization; project administration; technical or material support; final approval; acquisition of the financial support for the project leading to this publication. Acevedo-Blanco: drafting manuscript; application of formal techniques to analyze or synthesize study data; development of methodology; creation of models; conducting the research and investigation process, specifically performing the experiments or data/evidence collection; data analysis/interpretation.